🧑🏻💻 Key Summary

NLP

Word Embedding

Word2Vec

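Training of Word2Vec

In plain Word2Vec training, frequent words are also trained frequently: every occurrence of a common word triggers another update. To counter this, each word is assigned a probability with which its occurrences are dropped from the training data (subsampling of frequent words).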

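Each occurrence of a word w_i is dropped with probability P(w_i) = 1 - sqrt(t / f(w_i)), where f(w_i) is the word's relative frequency and t is a chosen threshold (typically around 10^-5). As the figure above shows, the lower a word's frequency, the smaller its drop probability.

Conversely, as the frequency grows, the term subtracted from 1 shrinks, so the drop probability grows.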
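A minimal Python sketch of this subsampling step, assuming the discard rule from the original Word2Vec paper, P(w) = 1 - sqrt(t / f(w)), with f(w) the relative frequency and t a threshold. The helper names `discard_probability` and `subsample` are hypothetical, and the toy threshold t = 0.01 is chosen only so the effect is visible on a tiny corpus:

```python
import math
import random
from collections import Counter


def discard_probability(freq: float, t: float = 1e-5) -> float:
    """Probability of dropping one occurrence of a word with relative frequency `freq`.

    Uses P(w) = 1 - sqrt(t / f(w)), clipped at 0 so words rarer than t are always kept.
    """
    return max(0.0, 1.0 - math.sqrt(t / freq))


def subsample(tokens: list[str], t: float = 1e-5, seed: int = 0) -> list[str]:
    """Return a copy of `tokens` with frequent words randomly discarded."""
    rng = random.Random(seed)
    counts = Counter(tokens)
    total = len(tokens)
    kept = []
    for word in tokens:
        p_drop = discard_probability(counts[word] / total, t)
        # Keep this occurrence with probability 1 - p_drop.
        if rng.random() >= p_drop:
            kept.append(word)
    return kept


# Toy corpus: "the" makes up 99% of the tokens, "cat" only 1%.
tokens = ["the"] * 99 + ["cat"]
print(discard_probability(0.99, t=0.01))  # about 0.90
print(subsample(tokens, t=0.01).count("the"))  # far fewer than 99
```

Here "cat" has f(w) = t, so it is never dropped, while most occurrences of "the" are discarded.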

Negative Sampling

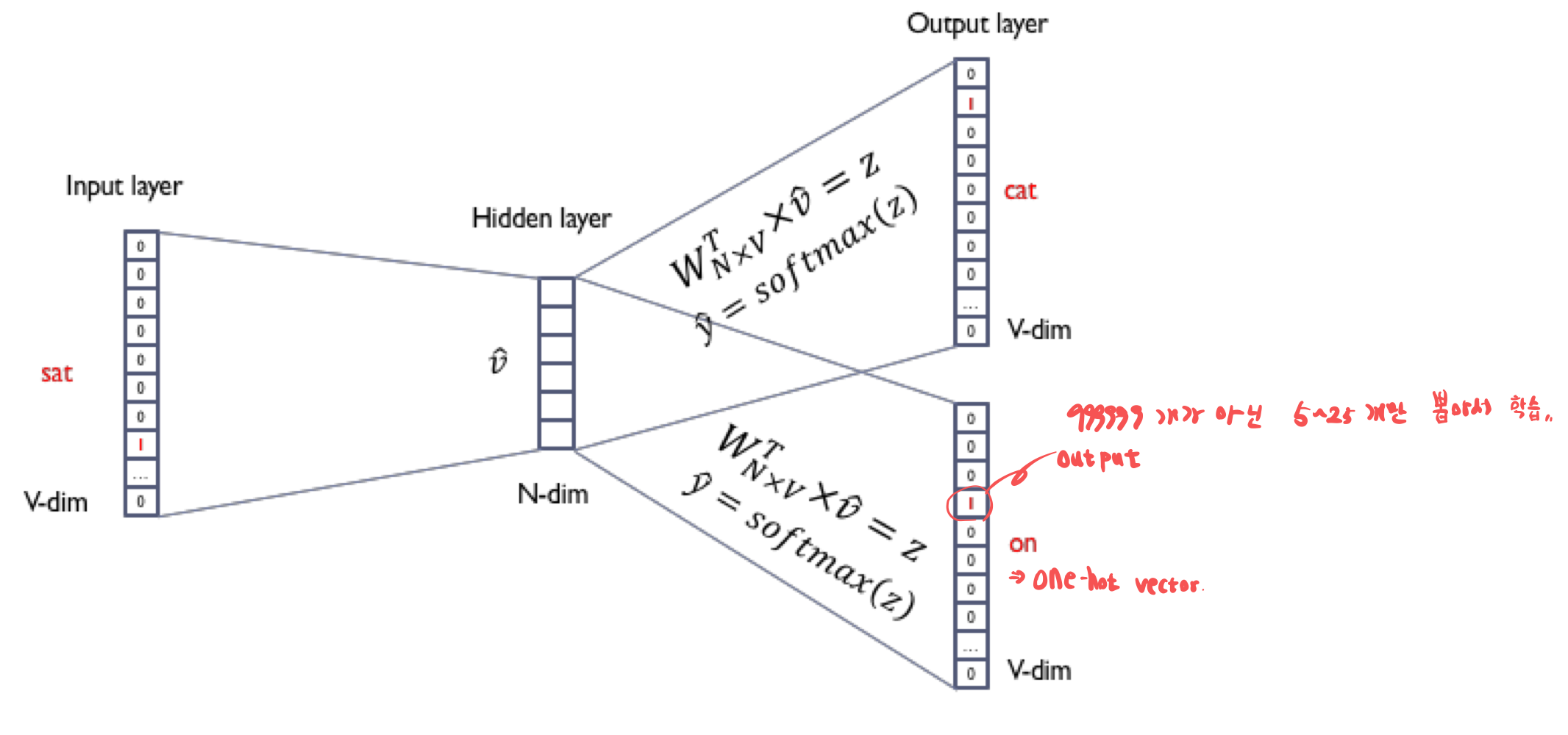

Consider the skip-gram setup shown in the figure.

In the figure, the center word cat is used to predict four surrounding words as outputs.

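Negative sampling reformulates this task: the input word and the former output (context) word are both fed in as inputs, and the model outputs the probability that the two words appear close to each other.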

The activation function here is the sigmoid, not the softmax: each pair is scored on its own as a binary decision (neighbors or not).

This model is called Skip-Gram with Negative Sampling (SGNS).
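Written out, the SGNS objective for one center word w, its true context word c, and k sampled negative words n_1, ..., n_k looks roughly as follows. The notation follows Mikolov et al.: v_w is the input vector of w, v'_c is the output vector of c, and σ is the sigmoid function.

```latex
P(D = 1 \mid w, c) = \sigma\left({v'_c}^{\top} v_w\right) = \frac{1}{1 + \exp\left(-{v'_c}^{\top} v_w\right)}

\mathcal{L}(w, c) = -\log \sigma\left({v'_c}^{\top} v_w\right) - \sum_{i=1}^{k} \log \sigma\left(-{v'_{n_i}}^{\top} v_w\right)
```

The first term pushes the real pair toward label 1, and the sum pushes each negative pair toward label 0, so one update touches only 1 + k output vectors.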

The labels are then changed as shown on the right of the figure: every real (input, context) pair receives label 1, and samples with label 0 are then added as shown above.

The resulting dataset labels a pair 1 if input 1 and input 2 are actually neighbors within the window, and 0 otherwise.
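A minimal Python sketch of how such a labeled dataset could be built. It assumes a window of 2, which gives cat four neighbors, and draws negatives uniformly from the vocabulary for simplicity. The sentence and the helper names `skipgram_pairs` and `sgns_dataset` are hypothetical. With uniform sampling a "negative" can occasionally be a real neighbor; the sketch ignores that.

```python
import random


def skipgram_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """All (center, context) pairs whose positions are at most `window` apart."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def sgns_dataset(tokens, vocab, window=2, k=2, seed=0):
    """(input1, input2, label) triples: 1 for real neighbors, 0 for random pairs."""
    rng = random.Random(seed)
    data = []
    for center, context in skipgram_pairs(tokens, window):
        data.append((center, context, 1))
        for _ in range(k):
            # Negative: pair the same center word with a random vocabulary word.
            data.append((center, rng.choice(vocab), 0))
    return data


tokens = "the fat cat sat on the mat".split()
print([c for w, c in skipgram_pairs(tokens) if w == "cat"])  # ['the', 'fat', 'sat', 'on']
for row in sgns_dataset(tokens, sorted(set(tokens)))[:3]:
    print(row)
```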

The result of negative sampling can be seen above.

Negative sampling was introduced because computing the softmax over a very large vocabulary of size V takes far too long: every single update requires a score for all V words.

For each real pair, a small number of negative samples (e.g., 5) is drawn at random.
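The text only says the negatives are drawn at random. The original Word2Vec paper draws them from the unigram distribution raised to the 3/4 power, which samples frequent words less often than their raw frequency would and rare words more often. A sketch assuming that choice; `noise_distribution` and `draw_negatives` are hypothetical helpers:

```python
import random
from collections import Counter


def noise_distribution(tokens, power=0.75):
    """Sampling probabilities proportional to count(w) ** power."""
    counts = Counter(tokens)
    words = sorted(counts)
    weights = [counts[w] ** power for w in words]
    total = sum(weights)
    return words, [x / total for x in weights]


def draw_negatives(words, probs, k, exclude, rng):
    """Draw k negative words, skipping the true context word `exclude`.

    Assumes at least one word other than `exclude` has nonzero probability.
    """
    negatives = []
    while len(negatives) < k:
        w = rng.choices(words, weights=probs, k=1)[0]
        if w != exclude:
            negatives.append(w)
    return negatives


tokens = ["the"] * 16 + ["cat"]
words, probs = noise_distribution(tokens)
# "the" gets about 8x the weight of "cat" (16 ** 0.75 = 8), not 16x.
print(words, probs)
print(draw_negatives(words, probs, k=5, exclude="cat", rng=random.Random(0)))
```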

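Then, instead of computing a softmax over the whole vocabulary, only the selected words (the true context word and the negatives) are scored, and a probability is computed for each of them.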
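A NumPy sketch of one SGNS update for a single training pair. It assumes separate input and output embedding matrices (`W_in`, `W_out`) and a sigmoid per scored word. Only 1 + k rows of `W_out` are read and written, instead of the V rows a full softmax would need. The names, shapes, and learning rate are illustrative assumptions:

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sgns_step(W_in, W_out, center, context, negatives, lr=0.025):
    """One SGD step on -log s(u_c.v) - sum_i log s(-u_ni.v); returns the loss before the step.

    W_in, W_out: (V, d) arrays updated in place; center/context/negatives are word ids.
    """
    v = W_in[center].copy()                      # (d,) input vector of the center word
    ids = np.array([context, *negatives])        # true context first, then negatives
    labels = np.zeros(len(ids))
    labels[0] = 1.0
    U = W_out[ids]                               # (1 + k, d) output vectors (a copy)
    p = sigmoid(U @ v)                           # probability of "neighbor" for each word
    loss = -np.log(p[0]) - np.sum(np.log(1.0 - p[1:]))
    g = p - labels                               # d loss / d score for each scored word
    np.subtract.at(W_out, ids, lr * np.outer(g, v))  # also correct for repeated negative ids
    W_in[center] -= lr * (g @ U)
    return loss


rng = np.random.default_rng(0)
V, d = 10, 8
W_in = rng.normal(scale=0.1, size=(V, d))
W_out = rng.normal(scale=0.1, size=(V, d))
losses = [sgns_step(W_in, W_out, 1, 2, [5, 7, 9], lr=0.5) for _ in range(50)]
print(losses[0], losses[-1])  # the loss on this pair goes down
```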

This is how Word2Vec is trained.

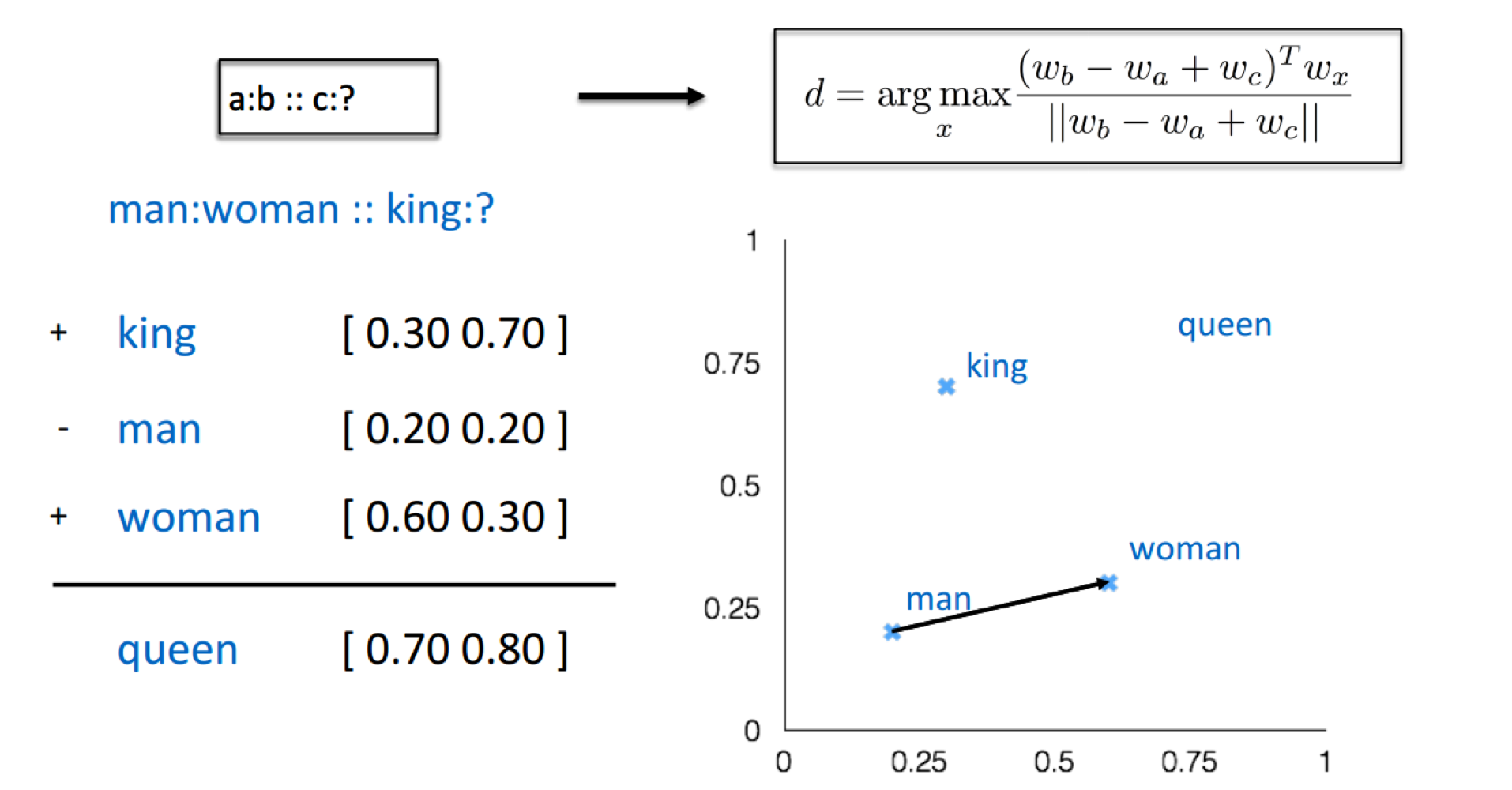

Word Analogies

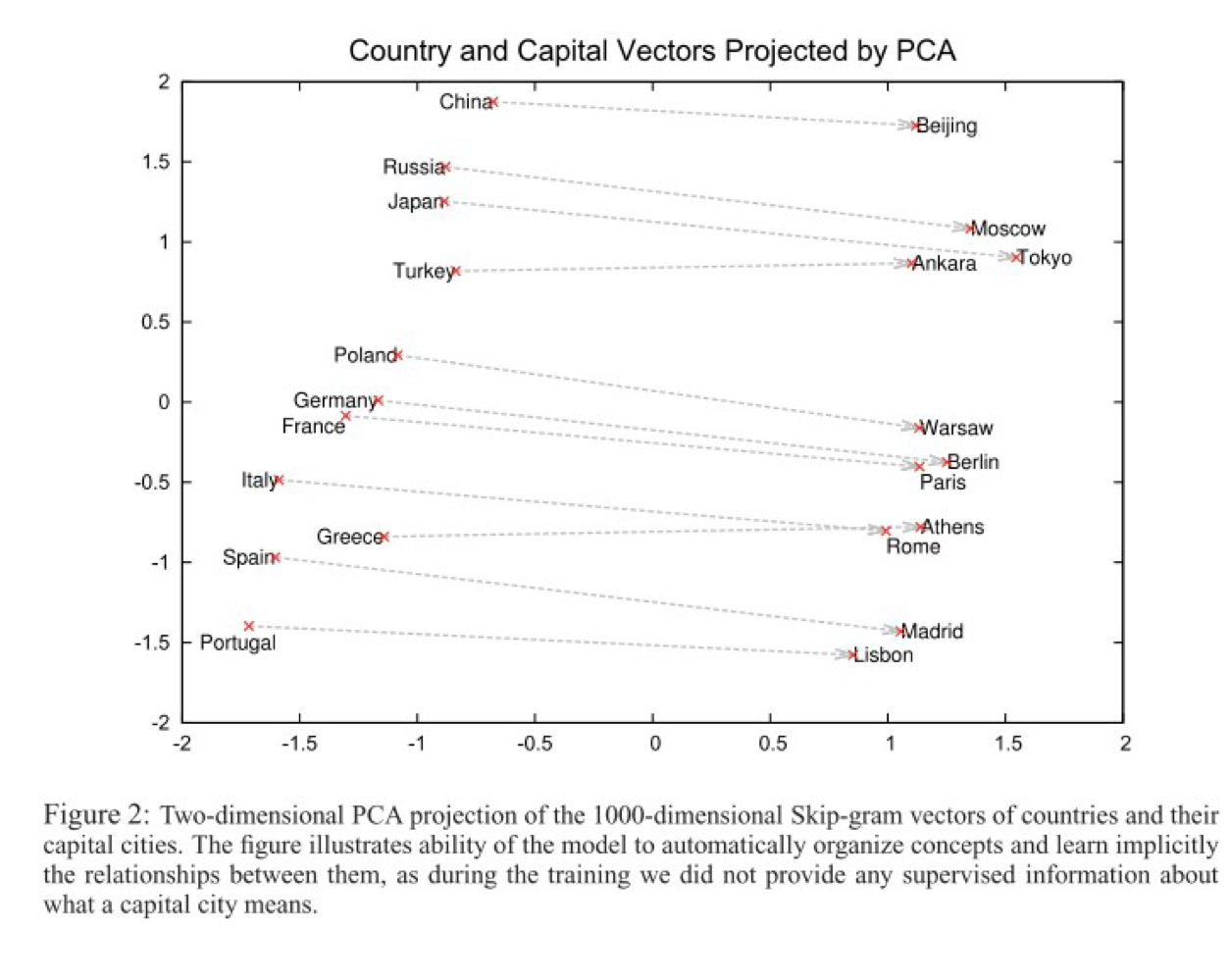

Now let's see how well vector arithmetic on word embeddings can retrieve the matching word.

What would be the result of vec(“Berlin”) - vec(“Germany”) + vec(“France”)?
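The query can be sketched as follows: compute vec("Berlin") - vec("Germany") + vec("France") and return the vocabulary word with the highest cosine similarity to it, excluding the three query words. The 4-dimensional vectors below are hand-made toy values, not trained embeddings, and `analogy` is a hypothetical helper:

```python
import numpy as np

# Hypothetical toy embeddings; dimensions roughly mean (capital, German, French, Italian).
emb = {
    "Germany": np.array([0.0, 1.0, 0.0, 0.0]),
    "France":  np.array([0.0, 0.0, 1.0, 0.0]),
    "Italy":   np.array([0.0, 0.0, 0.0, 1.0]),
    "Berlin":  np.array([1.0, 1.0, 0.0, 0.0]),
    "Paris":   np.array([1.0, 0.0, 1.0, 0.0]),
    "Rome":    np.array([1.0, 0.0, 0.0, 1.0]),
}


def analogy(emb, a, b, c, topn=1):
    """Words closest (by cosine) to vec(a) - vec(b) + vec(c), excluding a, b and c."""
    words = list(emb)
    M = np.stack([emb[w] for w in words])
    M = M / np.linalg.norm(M, axis=1, keepdims=True)   # unit-length rows
    target = emb[a] - emb[b] + emb[c]
    sims = M @ (target / np.linalg.norm(target))
    ranked = [words[i] for i in np.argsort(-sims) if words[i] not in {a, b, c}]
    return ranked[:topn]


print(analogy(emb, "Berlin", "Germany", "France"))  # ['Paris']
```

With trained vectors, gensim's `KeyedVectors.most_similar(positive=["Berlin", "France"], negative=["Germany"])` runs the same kind of query.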

Similar examples are shown in the figure below.

By converting words into vectors, we can finally compute with them numerically and obtain the results we want.

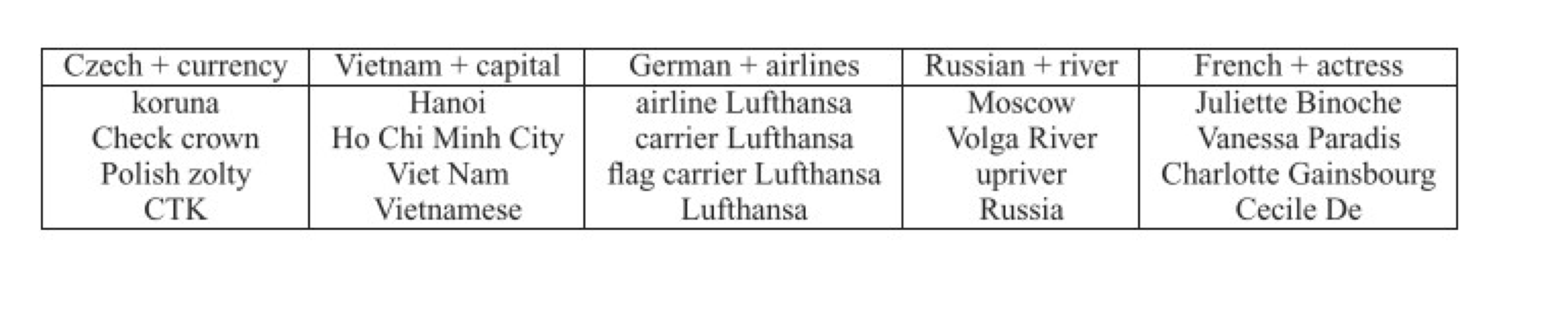

Additive Compositionality

Additive compositionality means that word vectors can be meaningfully combined by termwise (element-wise) addition.

The numerical representation explains why this works:

- Word vectors
  - Word vectors are in a linear relationship with the softmax nonlinearity.
  - Vectors represent the distribution of contexts in which a word appears.
  - Vectors are logarithmically related to probabilities.
- Sum of word vectors
  - Sums correspond to products.
  - A sum therefore gives the product of the two context distributions.
  - This amounts to ANDing together the two words in the sum.
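The "sums correspond to products" step can be checked numerically. Under the Word2Vec softmax, the context distribution of a word is p(c | w) ∝ exp(v'_c · v_w). Adding two input vectors gives exp(v'_c · (v_a + v_b)) = exp(v'_c · v_a) · exp(v'_c · v_b). The context distribution of the sum is therefore the normalized product of the two words' context distributions: a context scores high only if it is likely for both words, which is the "AND" above. The sketch uses random vectors as hypothetical stand-ins for trained ones:

```python
import numpy as np


def softmax(x):
    z = np.exp(x - x.max())  # subtract the max for numerical stability
    return z / z.sum()


rng = np.random.default_rng(0)
V, d = 6, 4
W_out = rng.normal(size=(V, d))  # hypothetical output (context) vectors v'_c
v_a = rng.normal(size=d)         # hypothetical input vector of word a
v_b = rng.normal(size=d)         # hypothetical input vector of word b

p_a = softmax(W_out @ v_a)              # p(c | a)
p_b = softmax(W_out @ v_b)              # p(c | b)
p_sum = softmax(W_out @ (v_a + v_b))    # context distribution of the summed vector

product = p_a * p_b
product /= product.sum()                # normalized product of the two distributions
print(np.allclose(p_sum, product))  # True
```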
