🧑🏻💻 Key Summary

NLP

Word Embedding

GloVe

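This post looks at GloVe.

A helpful way to approach it is as a statistics-based (count-based) take on Word2Vec.

Word2Vec uses softmax regression to preserve the semantic similarity between words, so that words with similar semantics end up with similar vectors.

Word2Vec trains two words with similar context-word distributions to have similar embedding vectors, and at the same time trains words with high co-occurrence to have similar embedding vectors.

With that in mind, pay close attention to how GloVe differs from Word2Vec.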

Introduction to GloVe

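Word2Vec is a model that uses the word vector of a single center word to predict the vectors of its context words.

GloVe learns word vectors in a way that is similar, yet different. It solves a regression problem: given the word vectors of two words that co-occur, predict their co-occurrence value.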
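As a rough illustration of where those co-occurrence values come from, the sketch below counts word pairs inside a fixed window. The function name `build_cooccurrence`, the toy corpus, and the window size are assumptions made for this example. The original GloVe implementation also down-weights distant pairs by 1/distance, which is left out here for simplicity.

```python
from collections import Counter


def build_cooccurrence(tokens, window=2):
    """Count how often each ordered (word, context) pair appears within `window` tokens."""
    counts = Counter()
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                counts[(word, tokens[j])] += 1
    return counts


# Toy corpus (hypothetical); a real corpus would be tokenized text.
corpus = "i like nlp i like deep learning".split()
X = build_cooccurrence(corpus, window=1)
print(X[("i", "like")])  # number of times "like" appears next to "i"
```

These counts are the regression targets (after taking the log) that GloVe tries to reproduce from the word vectors.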

- Latent Semantic Analysis
  - Pro : efficiently leverage statistical information
  - Con : relatively poor on the word analogy task
- Word2Vec
  - Pro : do better on the analogy task
  - Con : poorly utilize the statistics of the corpus
    - They focus on local context windows instead of on global co-occurrence counts.

Based on this, the point is that earlier methods such as Word2Vec do not directly reflect corpus-wide frequency, whereas GloVe builds its embeddings so that they do.

In other words, it is frequency-based learning.

The idea is as follows.

💡 Basic idea : The inner product of the embeddings of two words needs to be close to the log of their co-occurrence frequency.

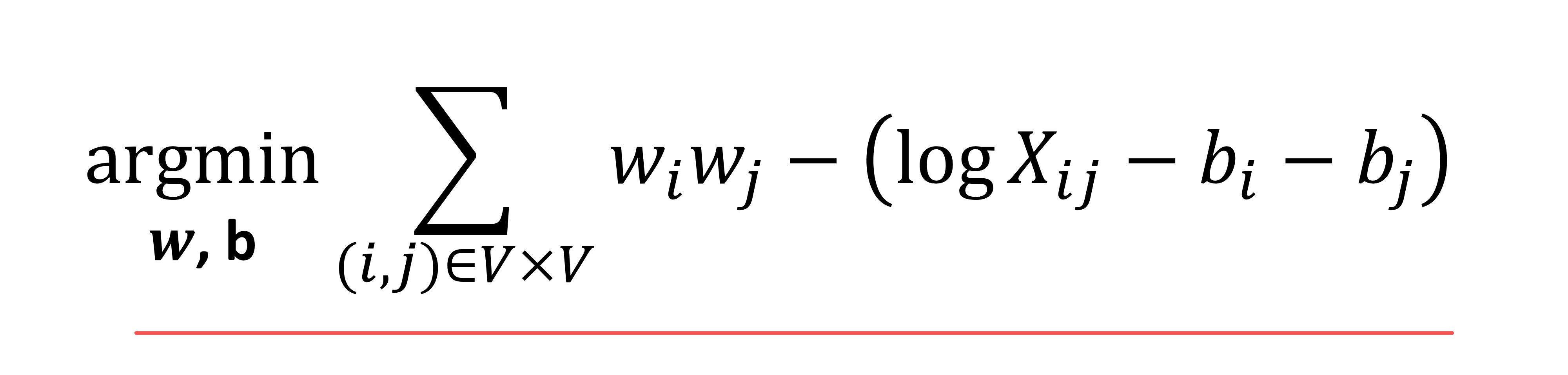

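The formula is shown above.

The word vectors (the weights w) and the biases b are trained so that the product of the two vectors plus the biases comes close to the log of the co-occurrence count.

In other words, how often each pair of words appears together is measured from the dataset, and those values serve as the targets.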
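For reference, the relation described here is usually written as below. The notation follows the original GloVe paper, so the symbols may differ slightly from the ones in the figure: $X_{ij}$ is the number of times word $j$ appears in the context of word $i$, $w_i$ and $\tilde{w}_j$ are the word and context vectors, and $b_i$ and $\tilde{b}_j$ are their biases.

```latex
w_i^{\top} \tilde{w}_j + b_i + \tilde{b}_j \approx \log X_{ij}
```

As a quick worked example, a pair that co-occurs 20 times has a target of $\log 20 \approx 3.0$, so training pushes the left-hand side for that pair toward 3.0.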

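In the end, we need to find the embeddings that make the gap between the two sides small.

Each word enters the formula as its embedding vector.

Looking at the weights in the formula above, the process goes as follows.

From this, we obtain the following expression.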

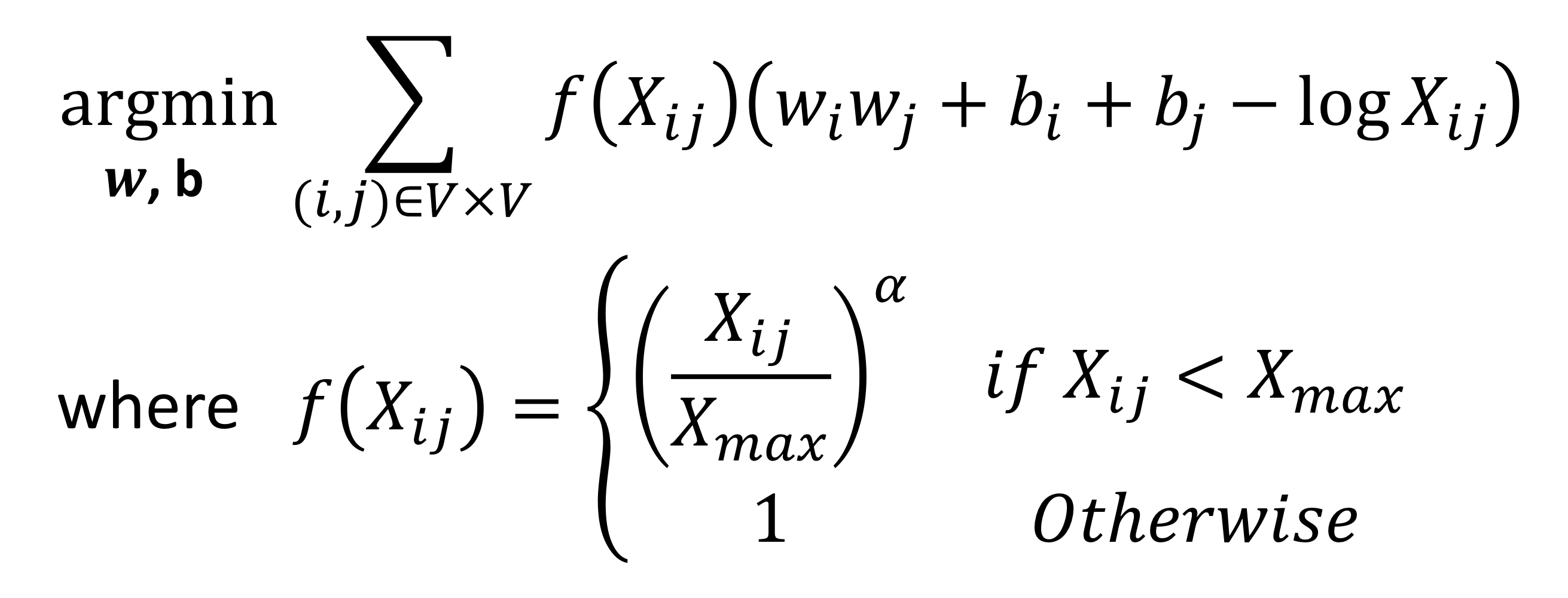

Finally, this can be summarized as follows.

The formula is written this way to reduce how much high-frequency words drive the updates.
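In the GloVe paper, this damping is done with a weighting function f(x) that equals (x / x_max)^α when x < x_max and 1 otherwise. The sketch below is a minimal version of it, using the paper's reported settings x_max = 100 and α = 0.75 as defaults. The function name `glove_weight` is only for this example.

```python
def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weighting function f(x): grows as (x / x_max) ** alpha, capped at 1."""
    if x < x_max:
        return (x / x_max) ** alpha
    return 1.0


# Rare pairs get a small weight; very frequent pairs are capped at 1.
for count in (1, 10, 100, 10000):
    print(count, round(glove_weight(count), 3))
```

Because the weight never exceeds 1, a pair that co-occurs 10,000 times counts no more than one that co-occurs 100 times, which keeps very frequent words from dominating training.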

The key takeaway from the formula is that training reflects how often words occur together.
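To make that concrete, here is a minimal training sketch of a weighted least-squares fit to log co-occurrence counts. It is an illustration under several assumptions, not the reference implementation: it uses plain SGD (the paper uses AdaGrad), a small dense count matrix with made-up numbers, and hypothetical names such as `train_glove`.

```python
import numpy as np


def train_glove(X, dim=8, epochs=200, lr=0.05, x_max=100.0, alpha=0.75, seed=0):
    """Fit word/context vectors and biases to log co-occurrence counts with plain SGD.

    X is a dense (V, V) array of co-occurrence counts; only nonzero cells are used.
    """
    rng = np.random.default_rng(seed)
    V = X.shape[0]
    W = rng.normal(scale=0.1, size=(V, dim))      # word vectors
    W_ctx = rng.normal(scale=0.1, size=(V, dim))  # context vectors
    b = np.zeros(V)                               # word biases
    b_ctx = np.zeros(V)                           # context biases
    pairs = np.argwhere(X > 0)                    # (i, j) index pairs with a nonzero count
    losses = []
    for _ in range(epochs):
        total = 0.0
        for i, j in pairs:
            x = X[i, j]
            f = min(1.0, (x / x_max) ** alpha)    # frequency weighting, capped at 1
            err = W[i] @ W_ctx[j] + b[i] + b_ctx[j] - np.log(x)
            total += f * err ** 2                 # weighted squared error for this pair
            g = 2.0 * f * err                     # shared gradient factor
            grad_w, grad_ctx = g * W_ctx[j], g * W[i]
            W[i] -= lr * grad_w
            W_ctx[j] -= lr * grad_ctx
            b[i] -= lr * g
            b_ctx[j] -= lr * g
        losses.append(total)
    return W, W_ctx, b, b_ctx, losses


# Toy symmetric co-occurrence counts for a 3-word vocabulary (made-up numbers).
X = np.array([[0, 4, 1],
              [4, 0, 2],
              [1, 2, 0]], dtype=float)
W, W_ctx, b, b_ctx, losses = train_glove(X)
print(losses[0], losses[-1])  # the summed loss should shrink over training
```

Each update only touches the two words in the pair, and the size of the update is scaled by how often that pair occurs together.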

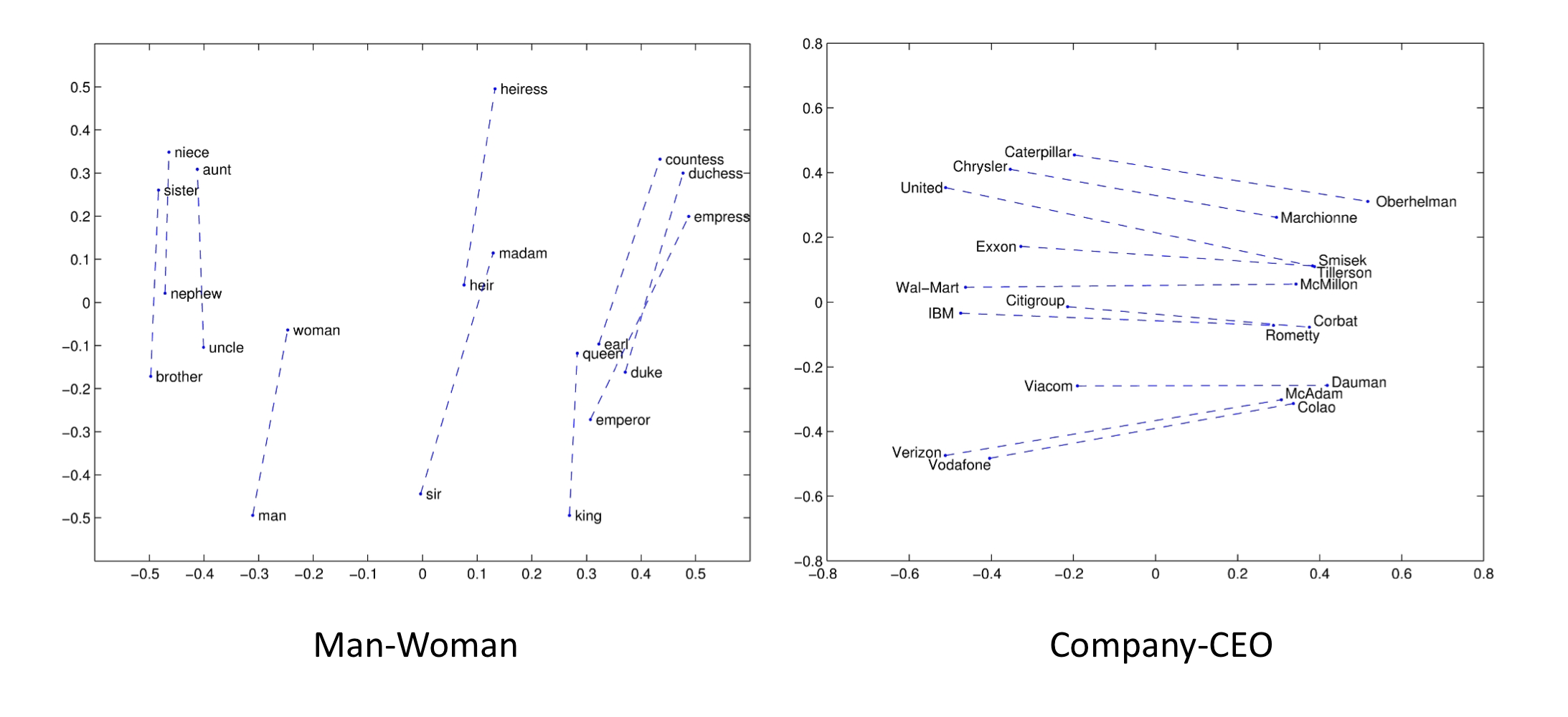

The results learned with GloVe are as follows.
