🧑🏻‍💻 Terminology

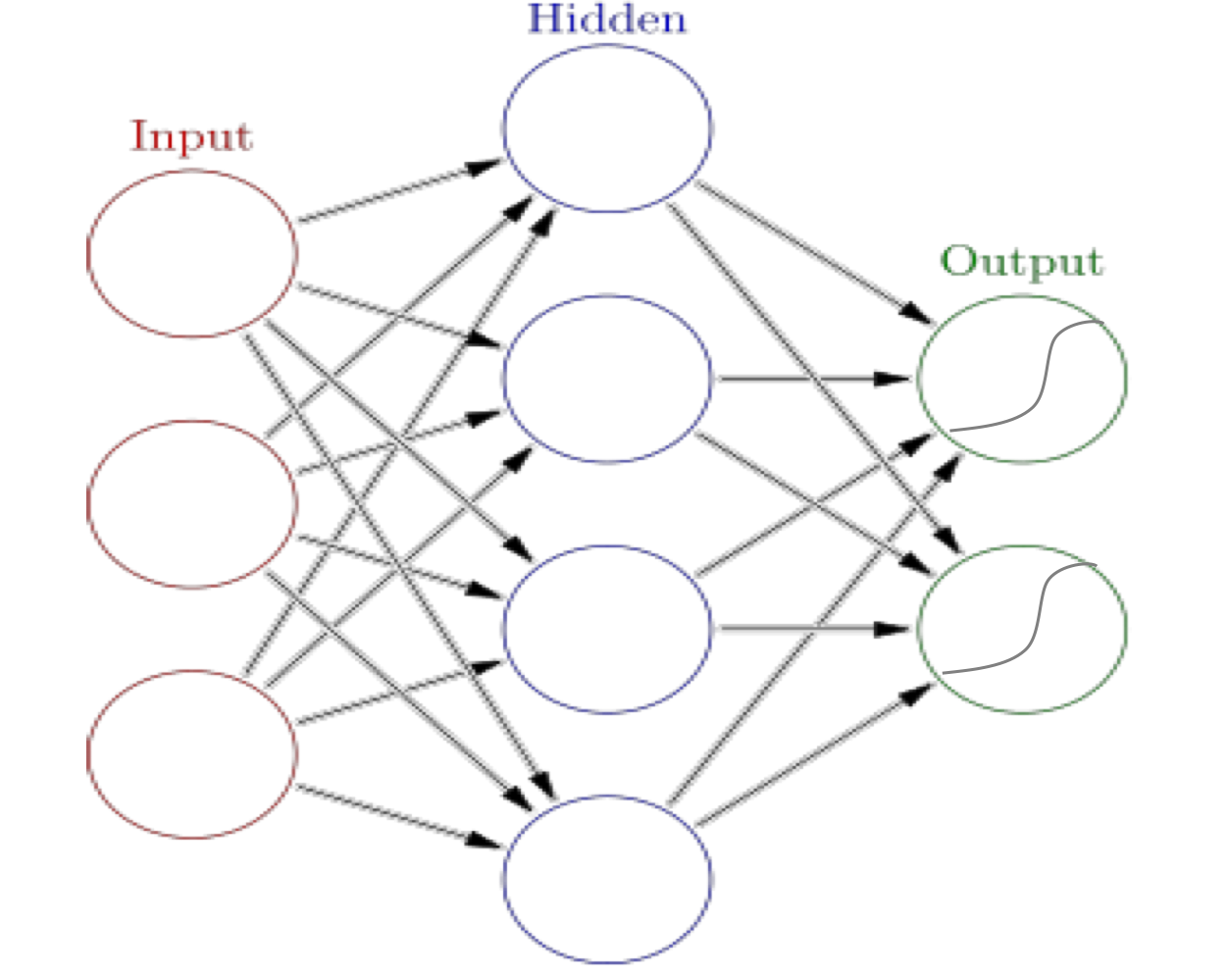

Neural Networks

Feed-forward

Backpropagation

Feed-forward

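The network inputs enter the input layer. The values computed there are passed to the hidden layer as its inputs, and the result of the hidden layer's computation is then passed to the output layer, which produces the final network output.

Every input is connected to every hidden node, and each connection enters the computation with its own weight.

In other words, every connection has a different weight.

i -> j : W_ji (the weight on the connection from node i to node j)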

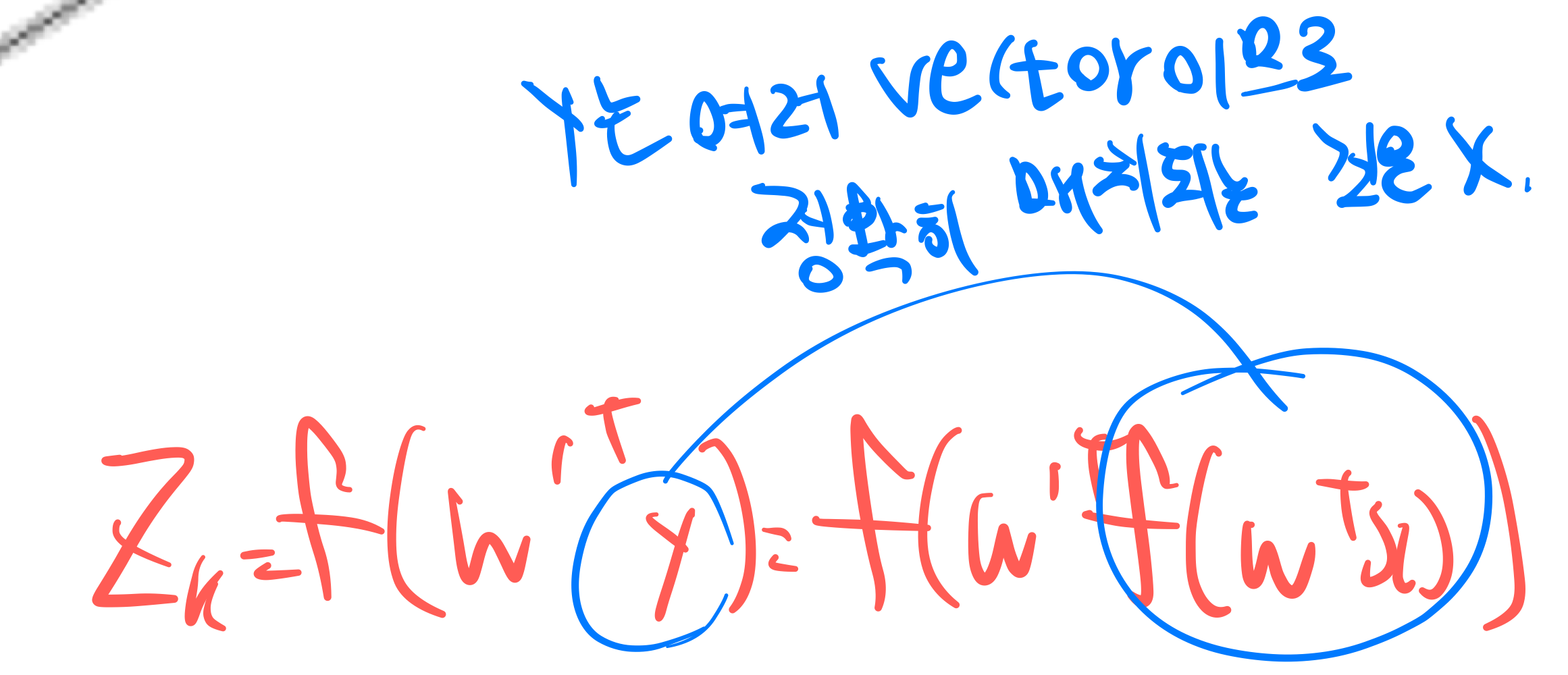

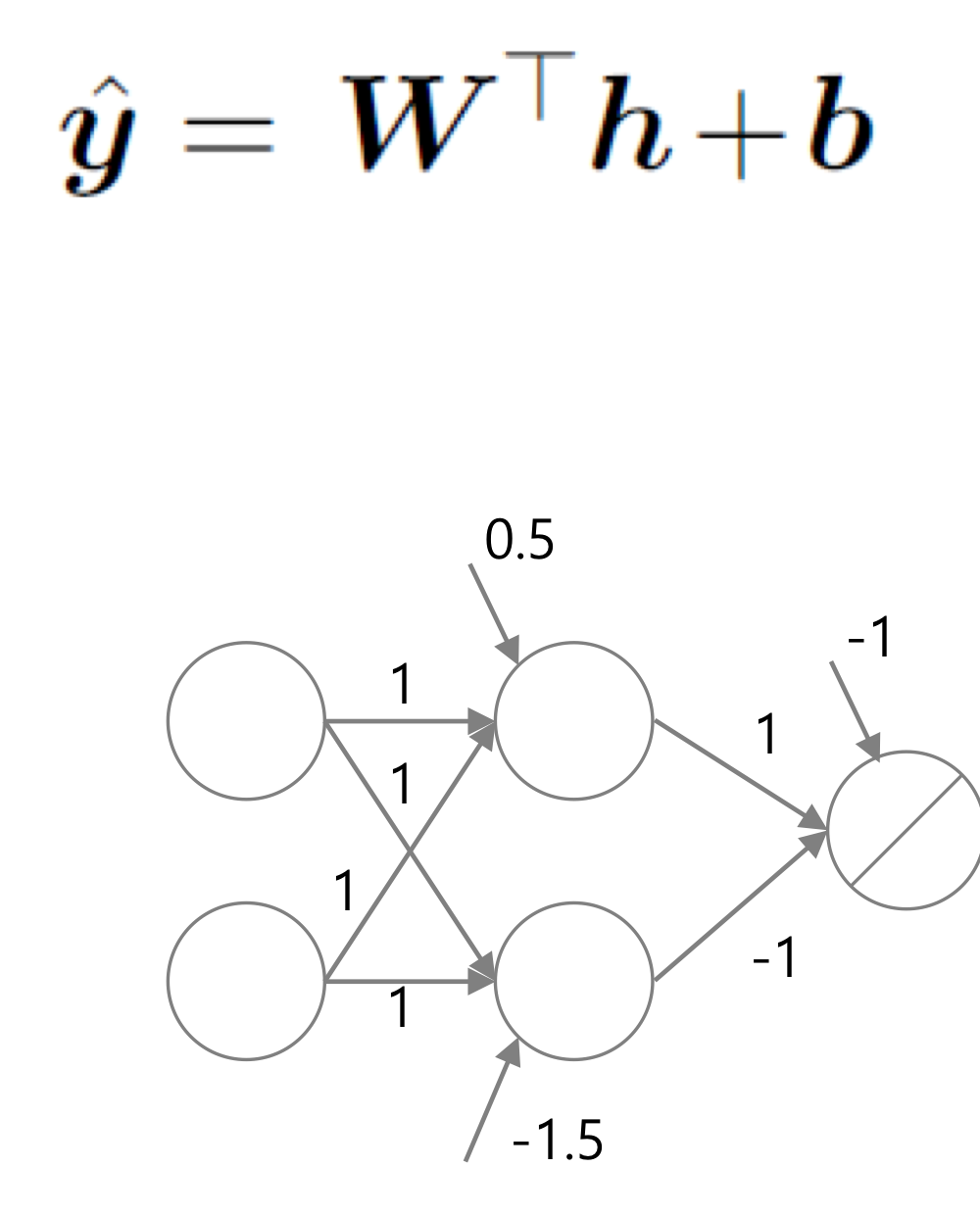

As in the notes above, the computation looks the same for every node, but the weight vector (the W^T part) is different for each one.

This step is input -> hidden.

Next, as shown above, comes the hidden -> output step.

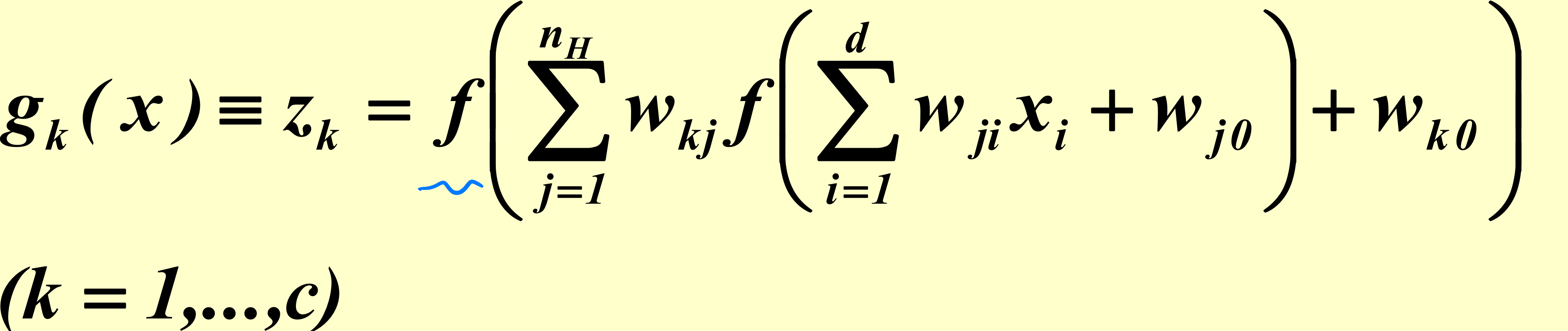

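Combining these steps, the whole process can be summarized as shown.

However, Y is a vector that can hold several output values, so this is not an exact formula.

Take it as an intuition and move on.

What matters is that the information from the input is computed layer by layer and keeps being passed "forward".

Stacking multiple layers in a network means that function evaluations are nested one inside another.

That is, the result of one function is used as the input for computing the next function.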
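The nesting described above can be sketched in plain Python. This is a minimal illustration, not the post's own code: the weight values are made up, bias terms are left out for simplicity, and the helper names `layer` and `feedforward` are hypothetical.

```python
import math


def sigmoid(z):
    # numerically stable logistic function, output in (0, 1)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def identity(z):
    return z


def layer(W, x, f):
    """One layer: W[j] holds the weights into node j; compute net_j, then f(net_j)."""
    return [f(sum(w_ji * x_i for w_ji, x_i in zip(row, x))) for row in W]


def feedforward(x, weights, activations):
    """Pass x through every layer in order; each output becomes the next input."""
    out = x
    for W, f in zip(weights, activations):
        out = layer(W, out, f)
    return out


# Hypothetical network: 2 inputs, 3 hidden nodes, 1 output.
W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
W2 = [[1.0, -1.0, 0.5]]
x = [1.0, 2.0]

y = feedforward(x, [W1, W2], [sigmoid, identity])
# The same computation written explicitly as a nested call: f2(W2 . f1(W1 . x))
nested = layer(W2, layer(W1, x, sigmoid), identity)
print(y == nested)  # True
```

The loop in `feedforward` and the explicit nested call perform the same operations in the same order, which is exactly the "function of a function" view of a multi-layer network.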

Now let's look at the equations in more detail.

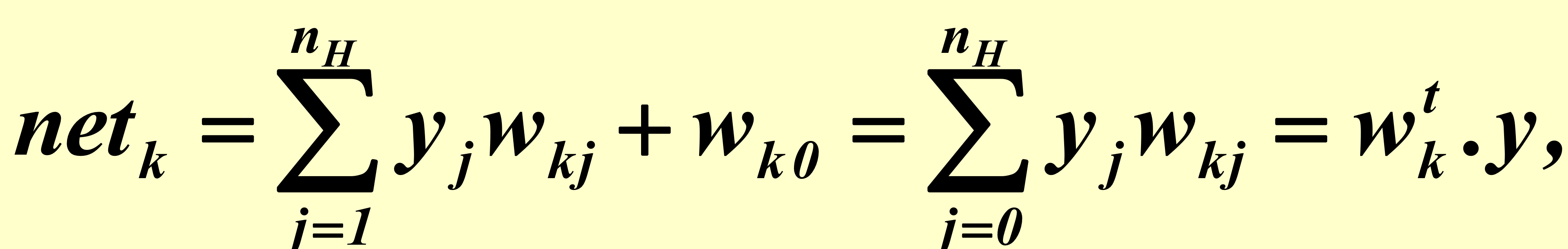

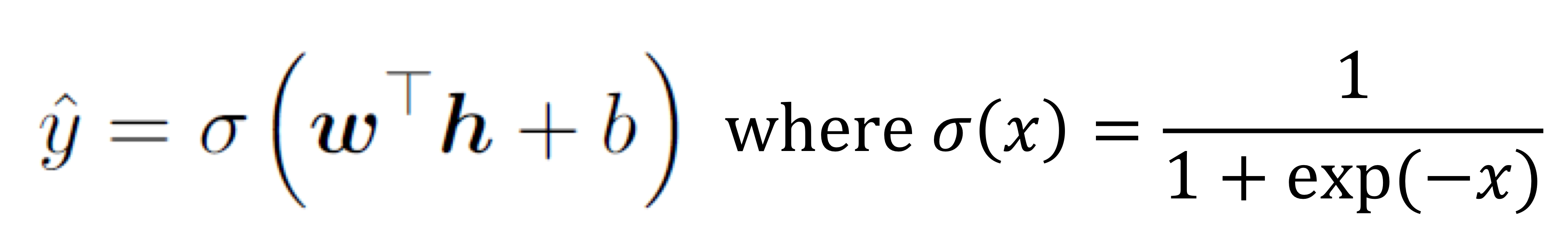

The equation below describes hidden to output.

net simply refers to the value computed by the network.

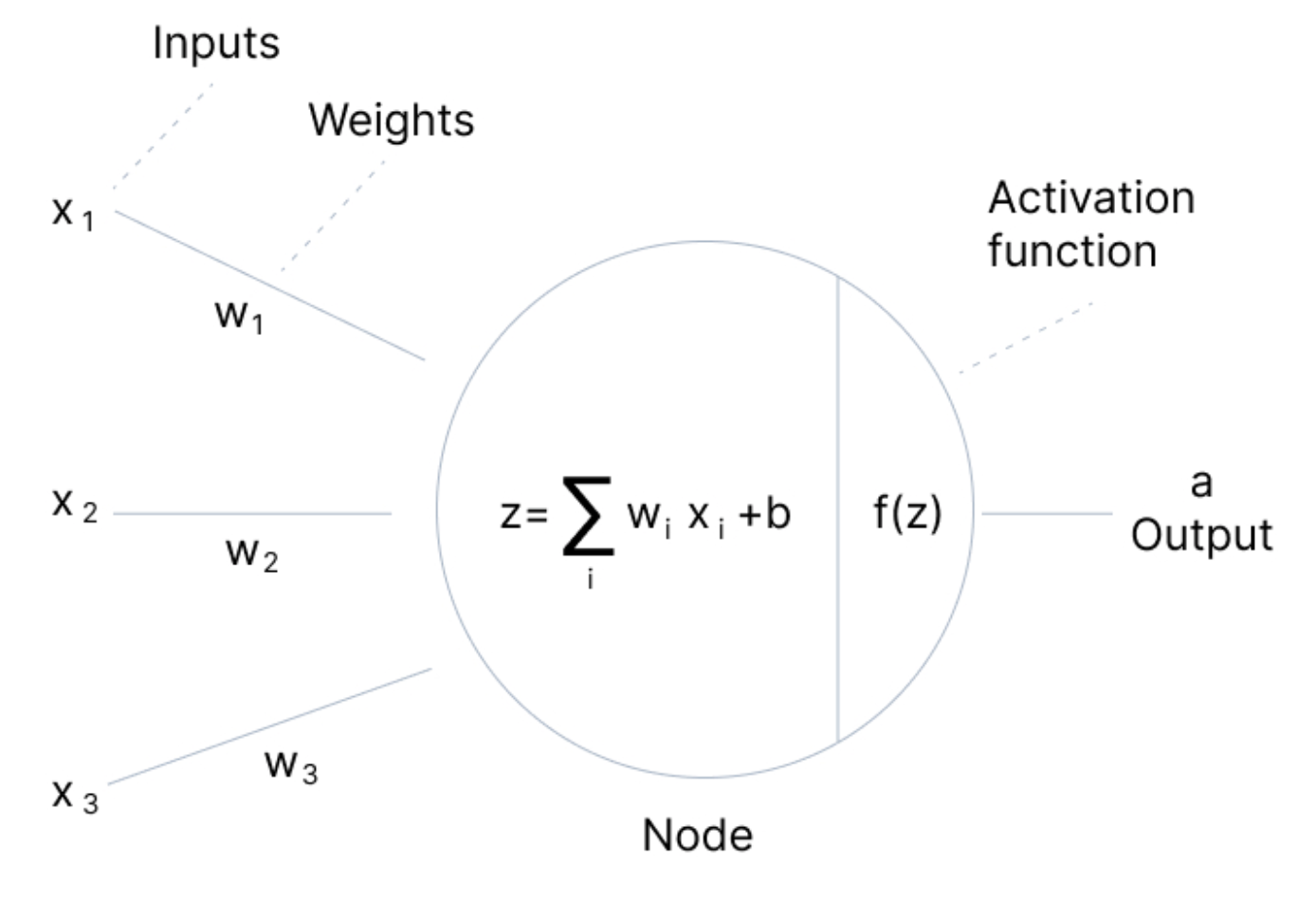

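As shown in the figure, each layer has a summation part and an activation part.

The result up to the summation step is called net.

Only after the activation is applied does the value become part of that layer's output vector.

In other words, net is not the final output.

That is, net_i is not the same as y_i.

It becomes y_i only after passing through the activation function.

Therefore, f(net_i) = y_i!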

This equation describes input to output.
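To make the net_i versus y_i distinction concrete, here is a small sketch of one layer that returns both values. The weights and inputs are hypothetical, the sigmoid is used only as an example activation f, and there is no bias term. The indexing `W[j][i]` follows the W_ji convention (from node i to node j) used above.

```python
import math


def sigmoid(z):
    # numerically stable logistic function, output in (0, 1)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def net_and_output(W, x, f=sigmoid):
    """Return (net, y) for one layer.

    W[j][i] is w_ji, the weight from input node i to node j.
    net_j = sum_i w_ji * x_i   (summation part)
    y_j   = f(net_j)           (activation part)
    """
    nets = [sum(W[j][i] * x[i] for i in range(len(x))) for j in range(len(W))]
    ys = [f(n) for n in nets]
    return nets, ys


W = [[0.5, -0.2], [0.1, 0.4]]  # two nodes, two inputs (made-up values)
x = [1.0, 2.0]
nets, ys = net_and_output(W, x)
print(nets)  # roughly [0.1, 0.9]: the summation only
print(ys)    # f applied to each net, so these are different numbers
```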

In the end, what matters is that the feedforward process runs in order, using each layer's output as the input to the next layer.

And the functions are always nested.

Seen this way, given an MLP with well-trained weights, we can take input data, run it through the feedforward process, and obtain the predicted output we want.

So what do we need to do to build an MLP?

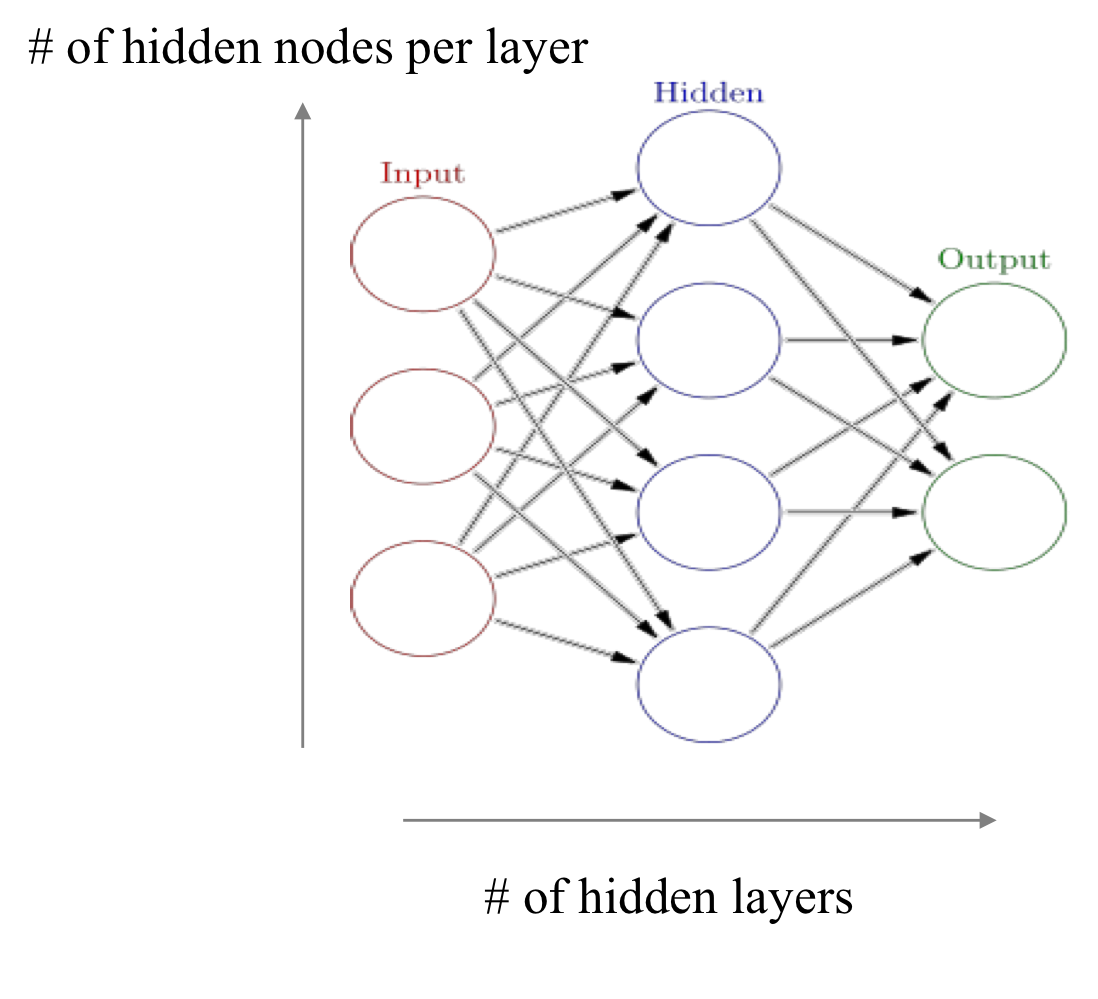

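First, suppose we have a neural net like the one in the figure above.

The weights are found through training. As for what we have to decide: the number of input nodes is determined by the data, that is, by the number of features, so it is already fixed.

The number of output nodes, on the other hand, depends on the problem we want to solve.

It varies case by case: classification, regression, and so on.

So what we actually have to choose is the number of hidden layers and the number of hidden nodes.

These are called depth and width, respectively.

They are not dictated by the problem; a person has to set them.

The more hidden layers and hidden nodes we use, the more capacity we give our model.

If the model has too much capacity, it becomes more likely to overfit.

Such a model does not generalize well; it effectively just memorizes the training data.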
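One rough way to see how depth and width add capacity is to count the parameters of a fully connected MLP. This sketch assumes every layer is fully connected and, optionally, that each non-input node has a bias; the function name `count_parameters` and the layer sizes in the example (4 features in, 3 outputs) are hypothetical.

```python
def count_parameters(n_inputs, hidden_widths, n_outputs, bias=True):
    """Count trainable parameters of a fully connected MLP.

    hidden_widths: list of hidden-layer widths; its length is the depth.
    Each layer contributes fan_in * fan_out weights (+ fan_out biases).
    """
    sizes = [n_inputs] + list(hidden_widths) + [n_outputs]
    total = 0
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        total += fan_in * fan_out
        if bias:
            total += fan_out
    return total


# Input and output sizes are fixed by the data and the task;
# only depth and width are ours to choose.
print(count_parameters(4, [8], 3))      # 67
print(count_parameters(4, [8, 8], 3))   # 139 (deeper)
print(count_parameters(4, [32], 3))     # 259 (wider)
```

Parameter count is only a proxy for capacity, but it shows why adding layers or nodes quickly makes it easier for the model to memorize a small training set.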

Striking the right balance is achieved through regularization and other techniques that improve generalization.

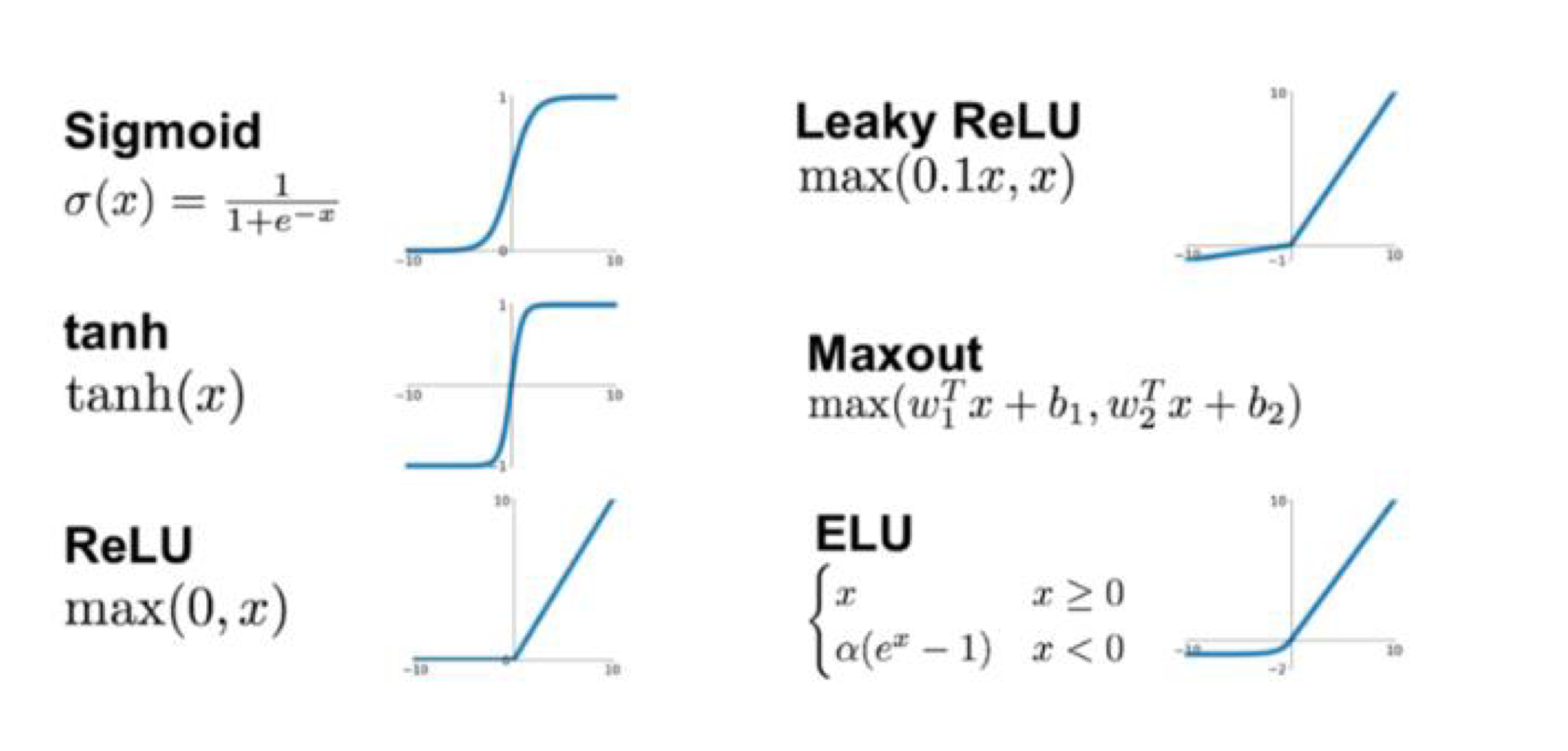

Activation Function

The next thing to decide is which activation function to use.

Today, ReLU and its variants are practically the default.

The hyperbolic tangent (tanh) is still widely used in RNN-family models.
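For reference, the activation functions named here can be written in a few lines. Leaky ReLU is included only as one example of the "ReLU family"; its slope `alpha=0.01` is a common but arbitrary choice, not something this post specifies.

```python
import math


def relu(z):
    # max(0, z): passes positive values through, zeroes out negatives
    return max(0.0, z)


def leaky_relu(z, alpha=0.01):
    # a ReLU variant: small slope alpha instead of a hard zero for z < 0
    return z if z > 0 else alpha * z


def tanh(z):
    # output in (-1, 1), centered at 0
    return math.tanh(z)


def sigmoid(z):
    # numerically stable logistic function, output in (0, 1)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), leaky_relu(z), round(tanh(z), 4), round(sigmoid(z), 4))
```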

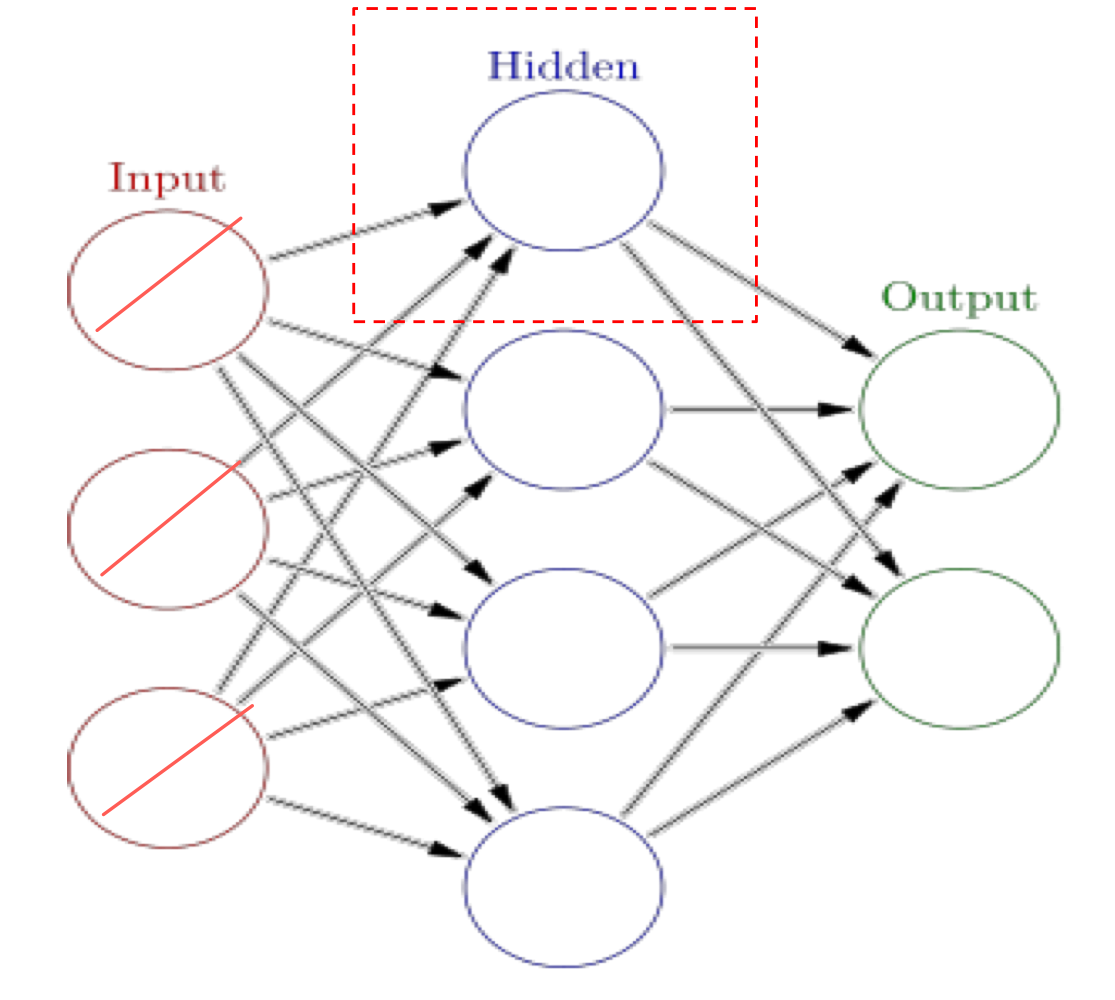

As in the figure above, the input nodes in the input layer are drawn with a y = x line to indicate that no activation function is applied there.

In the hidden layer and the output layer, the activation function plays different roles.

Role of activation function in hidden unit

- If the signal, i.e., net_j, is large enough, the hidden unit passes it on as a large value.

- If it is small, the unit passes on a small value.

- It also applies a nonlinear transformation: the hidden units are not linear.
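The nonlinearity of the hidden units is what makes stacking layers worthwhile. A small sketch with made-up weights (no biases) shows that two purely linear layers collapse into a single linear layer, while inserting ReLU between them breaks that equivalence. The helpers `matvec` and `matmul` are illustrative, written in plain Python.

```python
def matvec(A, x):
    # A @ x for a list-of-rows matrix A
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def matmul(A, B):
    # A @ B for list-of-rows matrices
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


W1 = [[1.0, 2.0], [3.0, 4.0]]   # input -> hidden (hypothetical values)
W2 = [[0.5, -1.0]]              # hidden -> output
x = [1.0, -1.0]

# Two linear layers with no activation ...
two_linear = matvec(W2, matvec(W1, x))
# ... equal one linear layer whose weight matrix is W2 @ W1.
one_linear = matvec(matmul(W2, W1), x)

# With ReLU on the hidden layer, the network is no longer a single linear map.
with_relu = matvec(W2, [max(0.0, h) for h in matvec(W1, x)])

print(two_linear, one_linear, with_relu)  # [0.5] [0.5] [0.0]
```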

Role of activation function in output unit

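- The form of the output node depends on what kind of problem we have.

- For binary classification, logistic (sigmoid) units are the natural choice.

- Since there are only two classes, a single output node is enough.

- That one output gives the probability of one class, and the probability of the other class is one minus that value.

- The output is then expressed as follows.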


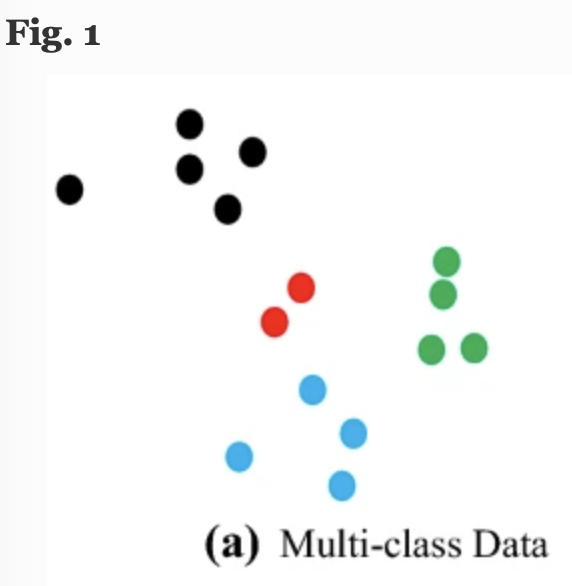

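- Multi-class classification is a different story. Here we must separate three or more classes.

- The number of output nodes is usually set to the number of classes.

- Softmax is used as the activation function, and the targets are one-hot encoded.

- If we used the logistic function for each class's probability instead, the values would not sum to 1.

- With logistic outputs, each value looks like a probability on its own, but taken together they do not form a probability distribution.

- That is why softmax is used.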


- We can also solve regression problems.

- In the classification cases above, the continuous values had to be converted into probabilities.

- In regression, however, we need the value itself, so no activation function is needed.

- We simply use the summation as it is, in the form y = net.

- That is, the output is produced as is, without passing through any function.

- There is no need for multiple output nodes, and linear units are arranged as in the figure below.

- So these linear units act as the output node's activation function, but in practice they just pass the value through unchanged.

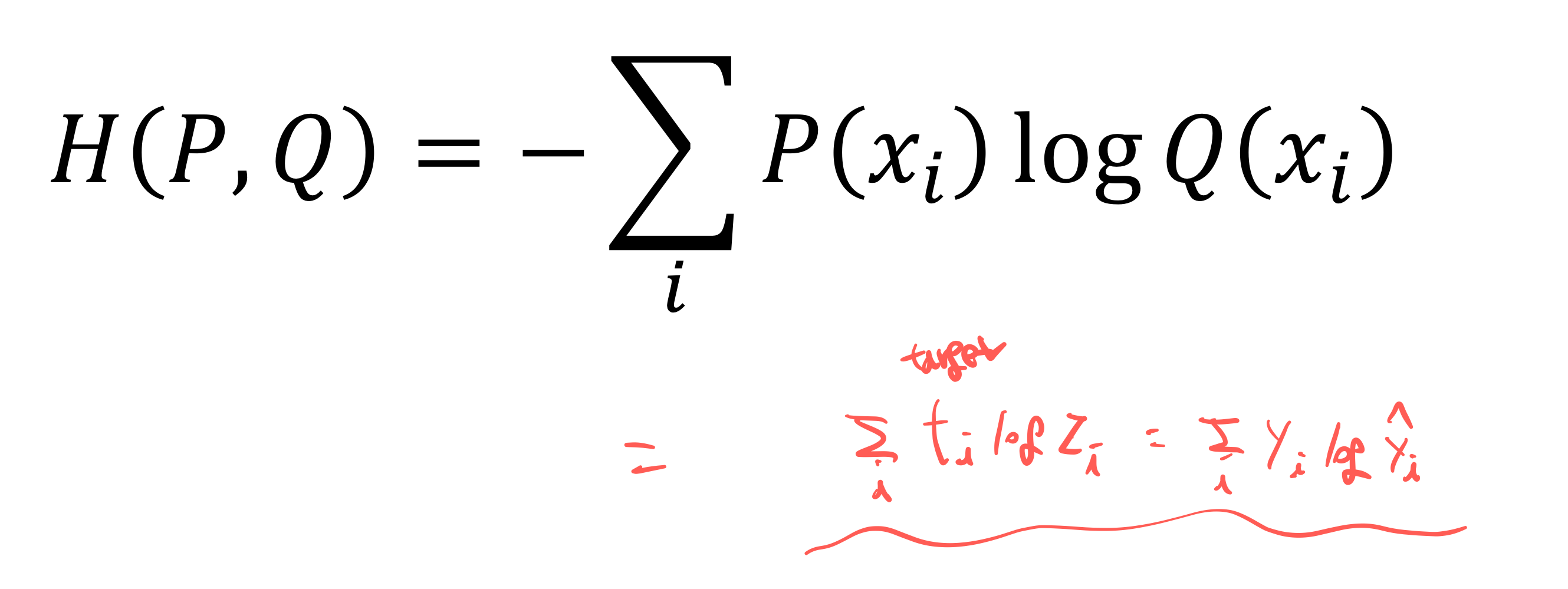
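The three output-layer cases above can be compared side by side. This is a sketch with hypothetical net values: sigmoid for a single binary-classification node, softmax for a multi-class output (with the usual max-subtraction trick for numerical stability, an implementation detail the post does not discuss), and the identity for regression. It also illustrates the point about applying the logistic function per class: those values do not sum to 1, while the softmax outputs do. The helper `one_hot` is a hypothetical name for the target encoding.

```python
import math


def sigmoid(z):
    # binary classification: one node, sigmoid(net) = P(class 1)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softmax(nets):
    # multi-class: one node per class; outputs are positive and sum to 1
    m = max(nets)                      # subtract the max to avoid overflow
    exps = [math.exp(n - m) for n in nets]
    total = sum(exps)
    return [e / total for e in exps]


def identity(net):
    # regression: y = net, the value passes through unchanged
    return net


def one_hot(label, n_classes):
    # target encoding used with softmax outputs
    return [1.0 if k == label else 0.0 for k in range(n_classes)]


nets = [2.0, 1.0, 0.1]                 # hypothetical output-layer nets, 3 classes
logistic_each = [sigmoid(n) for n in nets]
probs = softmax(nets)

print(sum(logistic_each))   # about 2.14: not a probability distribution
print(sum(probs))           # 1.0 up to floating-point rounding
print(one_hot(0, 3))        # [1.0, 0.0, 0.0]
print(identity(3.7))        # 3.7
```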

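Next, we need to decide which loss function to use.

Loss Function

Now it is time to compute a loss function to check how well the classification, regression, and other tasks described above are being performed.

In other words, the loss measures how close our network's outputs are to the correct answers.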

First, classification uses cross-entropy.

We train the network in the direction that minimizes this value.
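A minimal sketch of cross-entropy for the two classification setups described earlier, assuming a sigmoid output for the binary case and softmax probabilities with a one-hot target for the multi-class case. The small `eps` clip that keeps `log(0)` from occurring is an implementation choice of this sketch, and the probability values are made up.

```python
import math


def binary_cross_entropy(y_true, p, eps=1e-12):
    # y_true is 0 or 1; p is the sigmoid output, i.e. predicted P(class 1)
    p = min(max(p, eps), 1.0 - eps)
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))


def categorical_cross_entropy(target_one_hot, probs, eps=1e-12):
    # target_one_hot: one-hot vector; probs: softmax output
    # only the true-class term survives: loss = -log(prob of true class)
    return -sum(t * math.log(max(q, eps)) for t, q in zip(target_one_hot, probs))


target = [0.0, 1.0, 0.0]
good = categorical_cross_entropy(target, [0.1, 0.8, 0.1])
bad = categorical_cross_entropy(target, [0.7, 0.2, 0.1])
print(good < bad)  # True: lower loss when more probability sits on the right class
```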

Next,

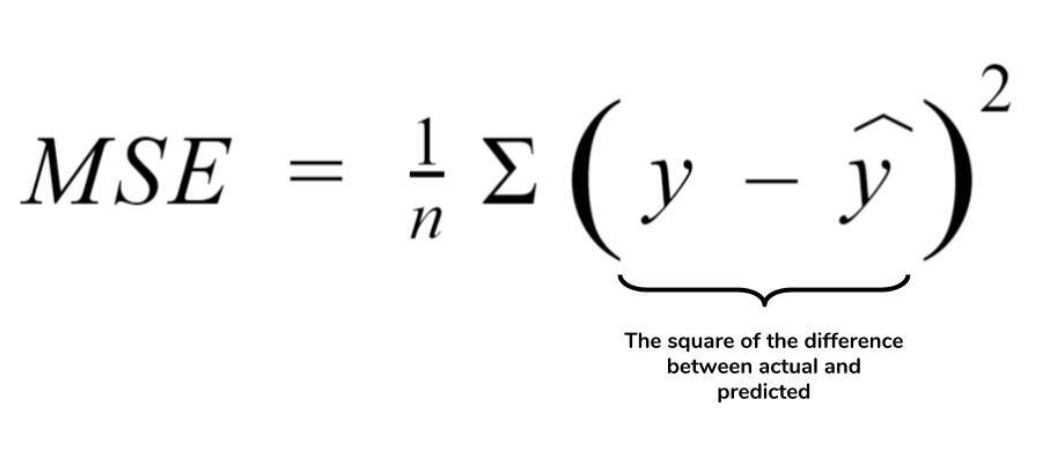

For regression, MSE (Mean Squared Error) is used.

Because this value is differentiable, it works well in the gradient-based training step that follows.

This can be summarized as above.
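For regression, here is a small sketch of MSE and its derivative with respect to the predictions; that derivative is what makes MSE convenient for the gradient-based step that follows. Conventions differ: some texts add a factor of 1/2 to simplify the derivative, while this sketch uses the plain mean. The data values are made up.

```python
def mse(y_true, y_pred):
    # mean of squared differences between targets and predictions
    n = len(y_true)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n


def mse_grad(y_true, y_pred):
    # dMSE/dp_k = 2 * (p_k - t_k) / n, defined everywhere (MSE is smooth)
    n = len(y_true)
    return [2.0 * (p - t) / n for t, p in zip(y_true, y_pred)]


y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 5.0]
print(mse(y_true, y_pred))       # 4/3, about 1.333
print(mse_grad(y_true, y_pred))  # only the last prediction gets a nonzero gradient

# Sanity check of the last component with a central finite difference.
h = 1e-6
up = mse(y_true, [1.0, 2.0, 5.0 + h])
down = mse(y_true, [1.0, 2.0, 5.0 - h])
print((up - down) / (2 * h))     # close to mse_grad(y_true, y_pred)[2]
```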
