🧑🏻💻 Terminology

Neural Networks

RNN

LSTM

Attention

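An RNN tries to learn from sequential information,

but it suffers from the "long-term dependency" problem: the further it moves along the sequence, the more it forgets what it learned earlier.

LSTM and GRU were introduced to address this.

Let's take a look.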

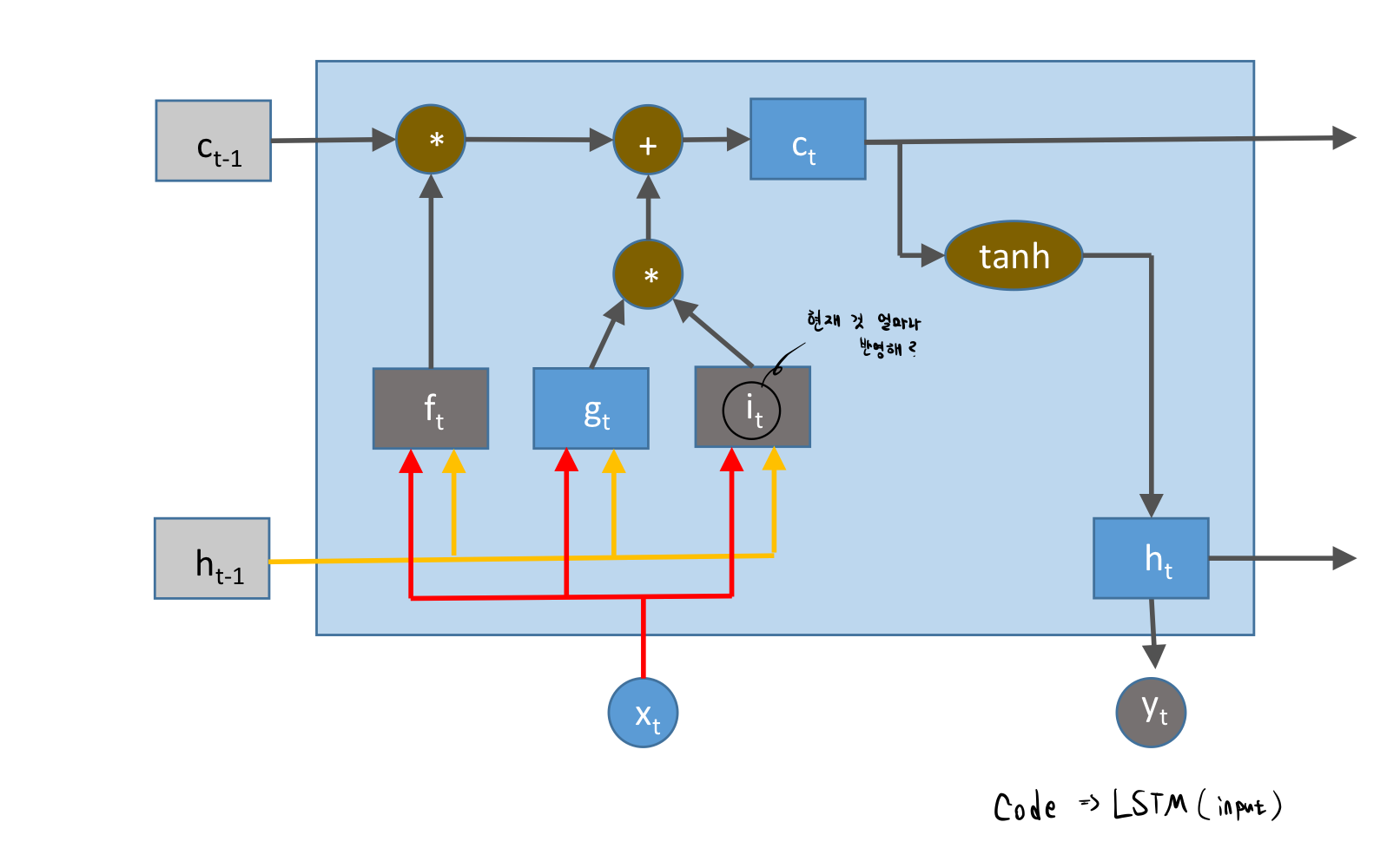

Long Short-Term Memory (LSTM)

- Capable of learning long-term dependencies.

- LSTM networks introduce a new structure called a memory cell.

- An LSTM can learn to bridge time intervals in excess of 1000 steps.

- Gate units that learn to open and close access to the past:

  - input gate

  - forget gate

  - output gate

- A neuron with a self-recurrent connection


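Let's look more closely.

Several mechanisms were added on top of the plain RNN.

The gates grew out of one idea: if some part of what has been computed so far matters more than the current word, let's give that part more weight!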

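- The input gate

  - decides how much of the current value to take in when a new input arrives.

- The forget gate

  - decides whether to carry $s_{t-1}$ over as is or to keep only part of it.

- The output gate

  - decides how much of the final result (the internal memory $c_t$, built from $g$) is used as output.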
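The three gates and the candidate value $g$ can be written out as follows. This is the common LSTM formulation, given here as an assumption because the post's figures are not reproduced in text. Bias terms are omitted, $\sigma$ is the sigmoid, and $U$ and $W$ are the weights applied to the input $x_t$ and the previous hidden state $s_{t-1}$:

$$
\begin{aligned}
i_t &= \sigma\left(x_t U^{i} + s_{t-1} W^{i}\right) \\
f_t &= \sigma\left(x_t U^{f} + s_{t-1} W^{f}\right) \\
o_t &= \sigma\left(x_t U^{o} + s_{t-1} W^{o}\right) \\
g_t &= \tanh\left(x_t U^{g} + s_{t-1} W^{g}\right)
\end{aligned}
$$

All four have the same form as a plain RNN update. Only the weights and the activation function differ.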

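The difference from a plain RNN is that

an LSTM keeps two states: the internal memory $c_t$ and the hidden state $s_t$.

Both are hidden states in a broad sense, but $s_t$ goes through the more complex computation: forget what should be forgotten and take in what is worth taking in.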

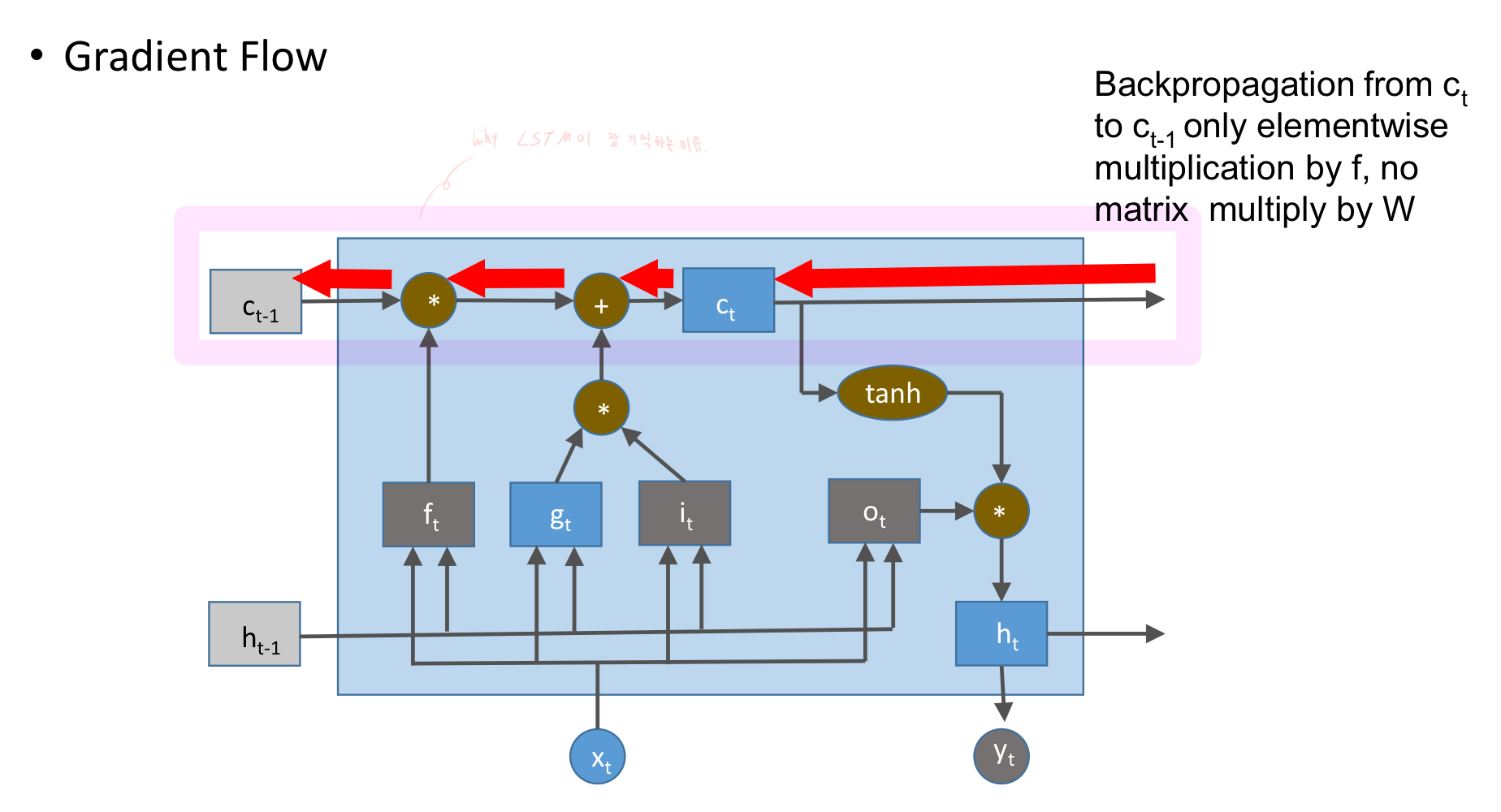

$c_t$, by contrast, uses a simple operation designed to preserve the past.

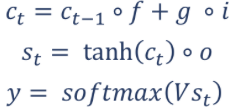

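RNN -> when an input arrives, it is combined with the previous hidden state to compute the new one.

LSTM => computing $g$ works the same way, but here it is only an intermediate step toward the hidden state.

Once $g_t$ is computed, it is added to $c_{t-1}$. It is a plain addition, and the same addition-only update is applied again when $c_{t+1}$ is computed.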
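Written out, this addition-only update looks like the following. It is the simplified form before any gates are applied, with $c_0$ denoting the initial internal memory (a symbol introduced here for illustration):

$$
c_t = c_{t-1} + g_t
\quad\Longrightarrow\quad
c_t = c_0 + \sum_{k=1}^{t} g_k
$$

Every past $g_k$ stays in the sum unchanged, which is why this path carries old information forward so easily.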

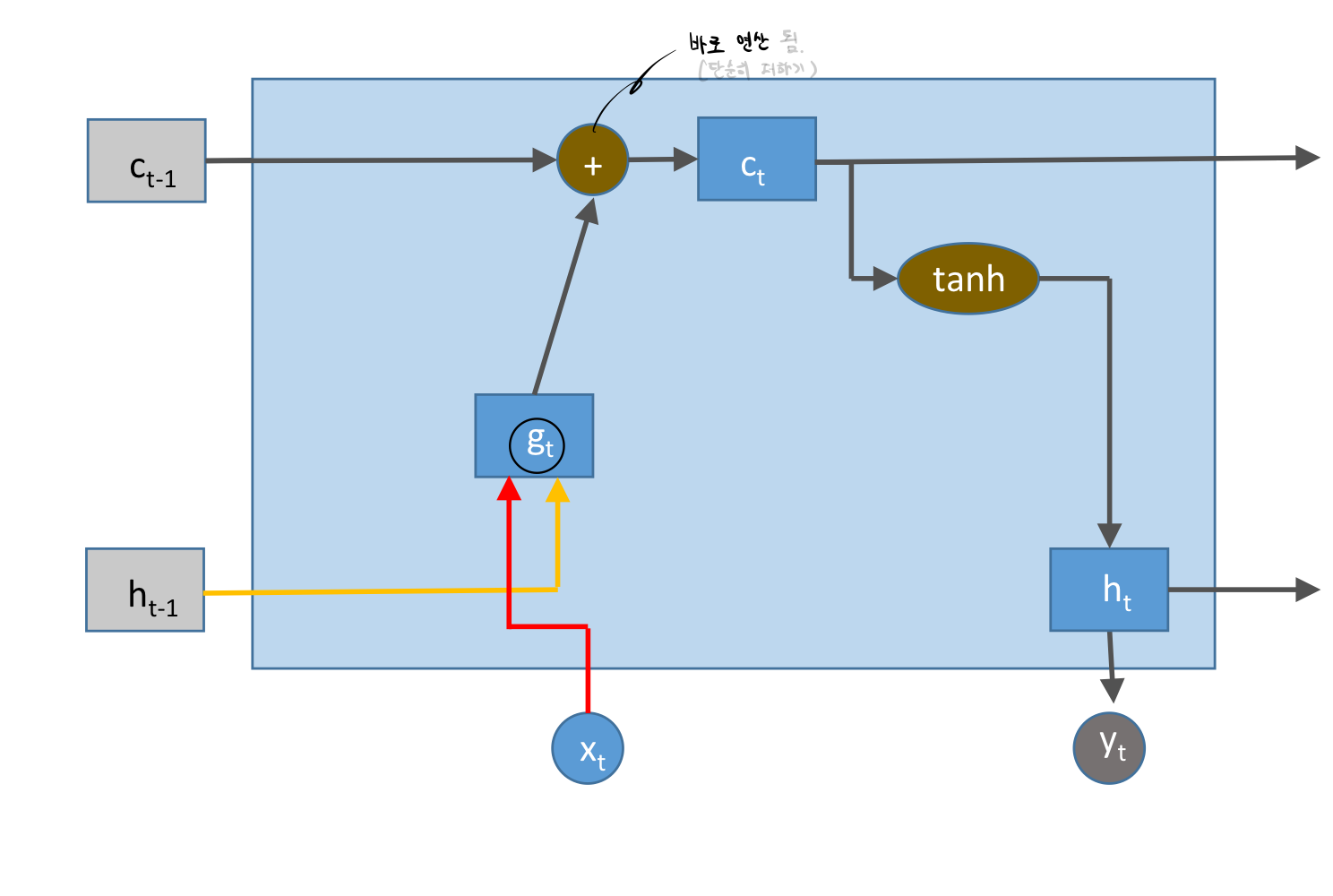

That is not the whole picture, though.

A term $f_t$ appears that determines how much to forget.

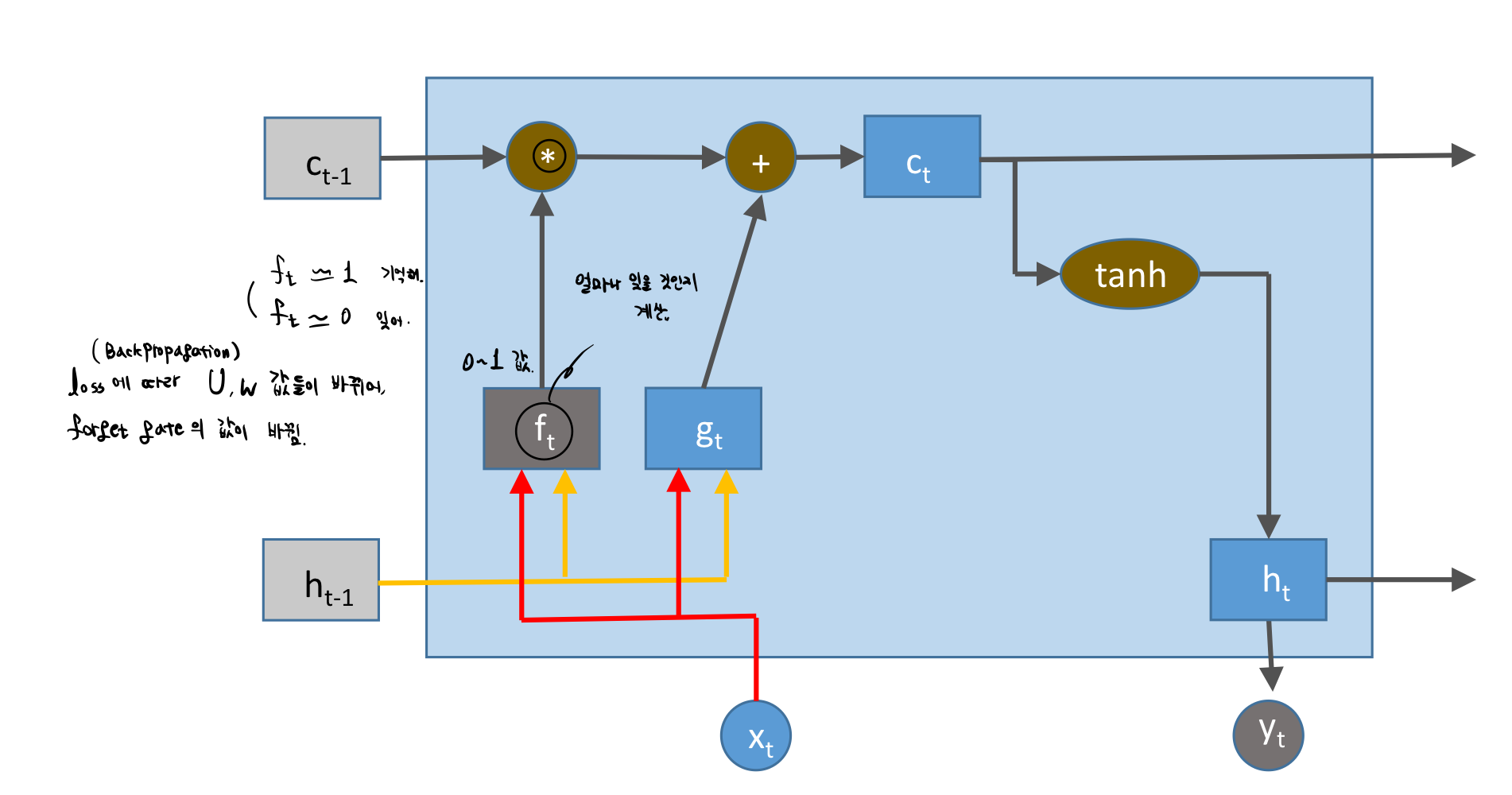

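Note that $g_t$ is a candidate hidden state, typically computed with tanh, so it lies between -1 and 1, whereas $i$, $f$, and $o$ are computed with a sigmoid, so they lie between 0 and 1.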

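Going back to the figure,

$f_t$ is multiplied by the previous internal memory $c_{t-1}$, and the result goes into the sum.

Because $f_t$ lies between 0 and 1, it can be read as follows:

- $f_t$ -> 1 => remember

- $f_t$ -> 0 => forget

In other words, when the network should forget, $c_{t-1}$ is multiplied by a value close to 0, and when it should remember, by a value close to 1.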
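To get a feel for what "close to 1" means over many steps, here is a small sketch. It assumes a constant forget gate value and no new input, which a trained LSTM would not have, and `retained_fraction` is a hypothetical helper written for this example:

```python
def retained_fraction(f: float, steps: int) -> float:
    """Fraction of an initial memory value left after `steps` updates.

    Assumes a constant forget gate `f` and no new input, so each step
    reduces to c_t = f * c_{t-1}.
    """
    c = 1.0
    for _ in range(steps):
        c = f * c  # the forget gate scales the previous internal memory
    return c


# Compare how much survives 100 steps for different forget gate values.
for f in (0.5, 0.9, 0.99):
    print(f"f = {f}: {retained_fraction(f, 100):.3e} of the original value remains")
```

Even a gate value of 0.9 leaves almost nothing after 100 steps, so remembering over long spans requires the gate to stay very close to 1.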

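During backpropagation, the weights $U$ and $W$ are updated according to the loss, and the forget gate values change with them.

$g_t$ is the candidate for the current state. The input gate $i_t$, computed from $h_{t-1}$ (the previous hidden state, written $s_{t-1}$ above) and $x_t$, decides how much of it to let in: $g_t$ is multiplied by that gate and added to the sum.

The resulting $c_t$ is not used directly, however. $o_t$ determines how much of $c_t$ to use.

$o_t$ is also a value between 0 and 1, and multiplying by it completes $h_t$.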
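Putting the pieces together, a single LSTM step can be sketched in NumPy as below. This is a minimal illustration under stated assumptions rather than the post's own implementation: bias terms are omitted, the hidden state is called `s` (the same quantity as $h_t$), `tanh` is applied to $c_t$ before the output gate as in the common formulation, and `init_params` and `lstm_step` are hypothetical helper names.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, squashing values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def init_params(input_dim, hidden_dim, seed=0):
    """Small random weights: U acts on the input, W on the previous hidden state."""
    rng = np.random.default_rng(seed)
    gates = ("i", "f", "o", "g")
    U = {k: rng.normal(0.0, 0.1, (input_dim, hidden_dim)) for k in gates}
    W = {k: rng.normal(0.0, 0.1, (hidden_dim, hidden_dim)) for k in gates}
    return U, W


def lstm_step(x_t, s_prev, c_prev, U, W):
    """One LSTM time step; returns the new hidden state and internal memory."""
    i = sigmoid(x_t @ U["i"] + s_prev @ W["i"])  # input gate, in (0, 1)
    f = sigmoid(x_t @ U["f"] + s_prev @ W["f"])  # forget gate, in (0, 1)
    o = sigmoid(x_t @ U["o"] + s_prev @ W["o"])  # output gate, in (0, 1)
    g = np.tanh(x_t @ U["g"] + s_prev @ W["g"])  # candidate value, in (-1, 1)
    c_t = f * c_prev + i * g   # keep part of the old memory, add part of the new
    s_t = o * np.tanh(c_t)     # expose part of the memory as the hidden state
    return s_t, c_t


# Run five random 3-dimensional inputs through a cell with 4 hidden units.
U, W = init_params(input_dim=3, hidden_dim=4)
s, c = np.zeros(4), np.zeros(4)
inputs = np.random.default_rng(1).normal(size=(5, 3))
for x_t in inputs:
    s, c = lstm_step(x_t, s, c, U, W)
print("hidden state:", s)
print("internal memory:", c)
```

The only place where the previous memory enters is the line `c_t = f * c_prev + i * g`, an elementwise multiply followed by an addition.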

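That is how the LSTM is put together.

Now let's revisit why we use it.

Why LSTM?

-> It is enough to remember that an LSTM handles long-term dependencies better than a plain RNN.

-> If an RNN does not perform well, an LSTM is worth trying.

It resembles an RNN, but it tracks both $c_t$ and $s_t$.

$c_t$ is the internal memory and $s_t$ is the hidden state, and each works differently.

- $c_t$ reflects how much of the previous state is forgotten and how much of the new information is remembered.

- $s_t$ reflects how much of the final computed value the output gate lets through.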

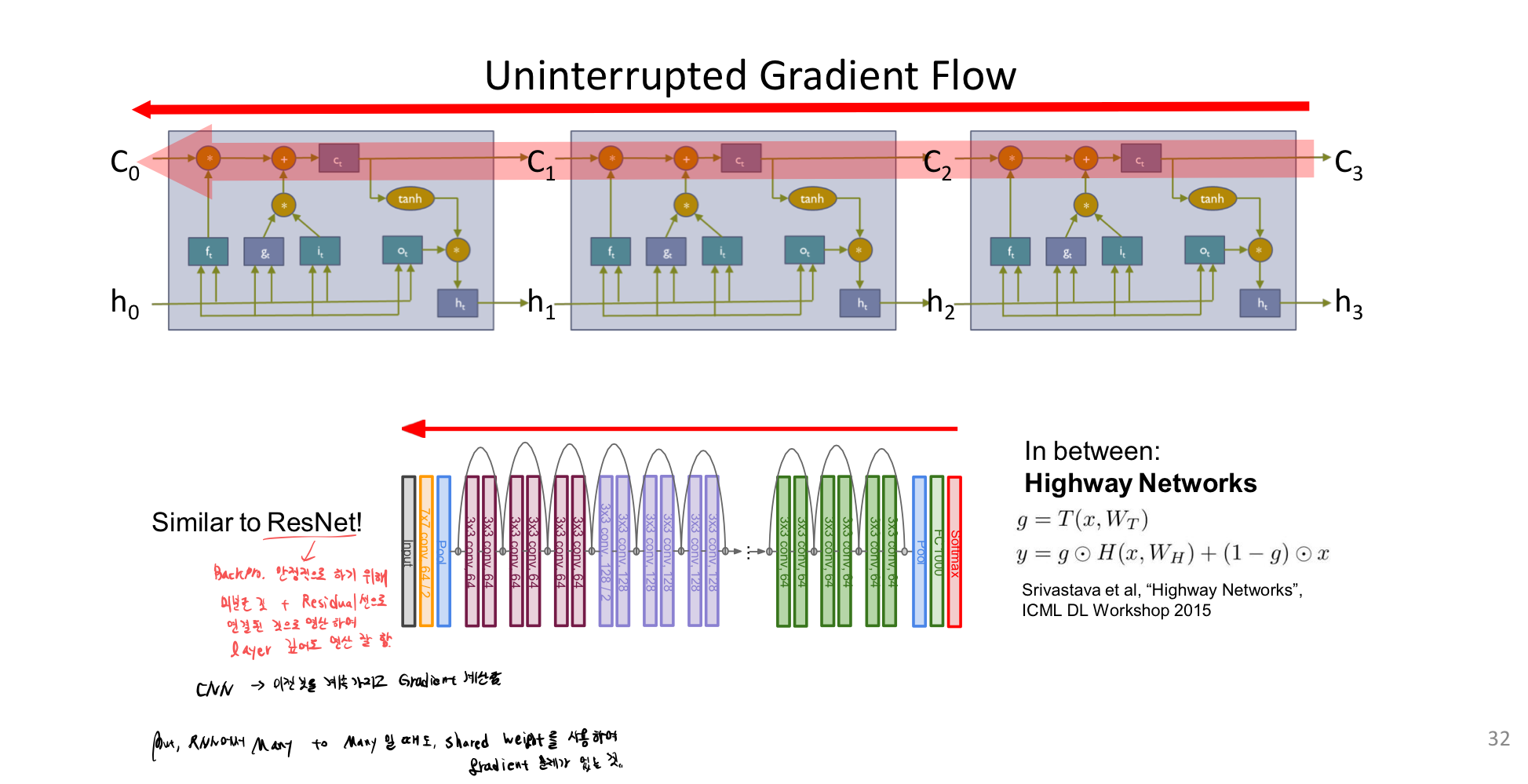

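Why does it handle long-term dependencies so well?

Because backpropagation proceeds as shown in the figure above!

Let's compare this with ResNet.

To make backpropagation stable, a ResNet lets gradients also flow through its residual (skip) connections, so gradients can still be computed well even when the network is deep. The additive update of $c_t$ plays the same role along the time axis.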
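The analogy can be sketched with a few derivatives. The forms below are assumptions based on the common formulations: the LSTM memory update $c_t = f_t \odot c_{t-1} + i_t \odot g_t$, a residual block $y = x + F(x)$, and a plain RNN $s_t = \tanh(x_t U + s_{t-1} W)$. For the LSTM only the direct path through the internal memory is shown, and the dependence of the gates on $s_{t-1}$ is ignored:

$$
\begin{aligned}
\text{LSTM:}\quad & \frac{\partial c_t}{\partial c_{t-1}} \approx \operatorname{diag}(f_t),
\qquad
\frac{\partial c_T}{\partial c_k} \approx \prod_{t=k+1}^{T} \operatorname{diag}(f_t) \\
\text{ResNet:}\quad & \frac{\partial y}{\partial x} = I + \frac{\partial F}{\partial x} \\
\text{RNN:}\quad & \frac{\partial s_t}{\partial s_{t-1}} = \operatorname{diag}\left(1 - s_t^{2}\right) W^{\top}
\end{aligned}
$$

When $f_t$ is close to 1, the gradient passes through the internal memory almost unchanged, much like the identity term $I$ in a residual block. The plain RNN instead multiplies by $W$ and the tanh derivative at every step, which tends to shrink or blow up the gradient over long sequences.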

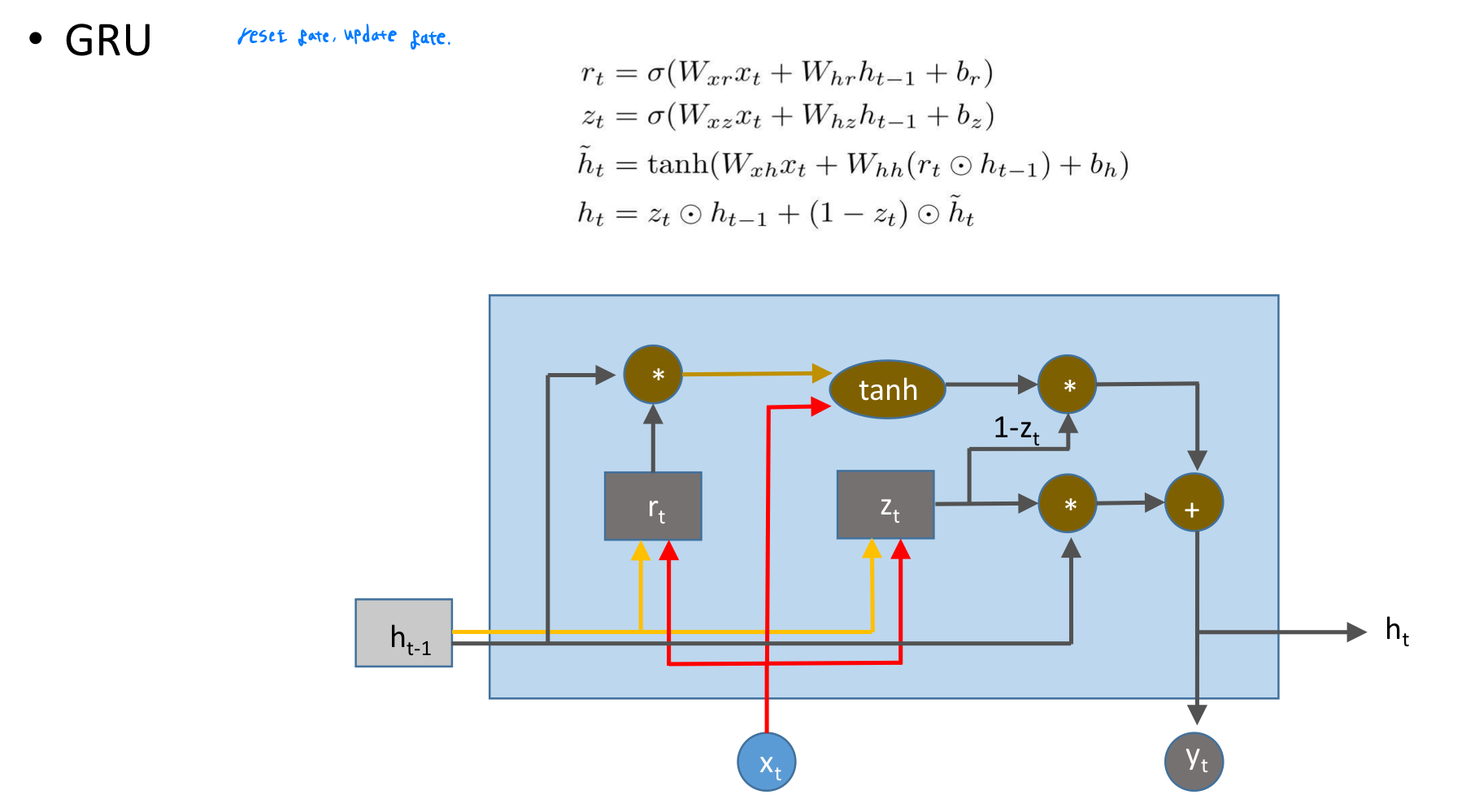

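GRU follows a similar idea, with a reset gate and an update gate.

What the two have in common is the focus on how much of the current state to update.

In short, a plain RNN applies the same operation at every step and keeps forgetting what came earlier,

whereas GRU and LSTM both let little of the less important information in and pass important information with a gate value close to 1, so it is remembered better.

In practice, every sequence model is run with a limit on sequence length.

In code, this appears as a fixed max length.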
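For comparison, here is a minimal sketch of one GRU step. The post does not give the GRU equations, so this follows one common formulation as an assumption: biases are omitted, the update gate `z` blends the old state with a candidate, the reset gate `r` scales the old state inside the candidate, and `gru_step` is a hypothetical helper name. Some references swap the roles of `z` and `1 - z`.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, squashing values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def gru_step(x_t, s_prev, U, W):
    """One GRU time step; returns the new hidden state."""
    z = sigmoid(x_t @ U["z"] + s_prev @ W["z"])           # update gate
    r = sigmoid(x_t @ U["r"] + s_prev @ W["r"])           # reset gate
    cand = np.tanh(x_t @ U["h"] + (r * s_prev) @ W["h"])  # candidate state
    return (1.0 - z) * s_prev + z * cand                  # blend old and new


# Run five random 3-dimensional inputs through a cell with 4 hidden units.
rng = np.random.default_rng(0)
U = {k: rng.normal(0.0, 0.1, (3, 4)) for k in ("z", "r", "h")}
W = {k: rng.normal(0.0, 0.1, (4, 4)) for k in ("z", "r", "h")}
s = np.zeros(4)
for x_t in rng.normal(size=(5, 3)):
    s = gru_step(x_t, s, U, W)
print("hidden state:", s)
```

Unlike the LSTM, this GRU keeps a single state vector and uses one gate for both forgetting and writing.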
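A minimal sketch of how such a limit is usually enforced: sequences longer than the max length are truncated and shorter ones are padded. The helper name `pad_or_truncate`, the padding id `0`, and the token ids are all made up for illustration.

```python
def pad_or_truncate(token_ids, max_length, pad_id=0):
    """Return a list of exactly `max_length` ids.

    Longer sequences are cut off at the end; shorter ones are filled
    with `pad_id` on the right.
    """
    clipped = token_ids[:max_length]
    return clipped + [pad_id] * (max_length - len(clipped))


# Three sequences of different lengths, forced to length 4.
batch = [[5, 8, 2], [7, 1, 4, 9, 3, 6], [11]]
padded = [pad_or_truncate(seq, max_length=4) for seq in batch]
print(padded)  # [[5, 8, 2, 0], [7, 1, 4, 9], [11, 0, 0, 0]]
```

With every sequence at the same length, a batch can be stacked into one array and processed step by step.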
