[Deep Neural Network] part 1 - 2

🧑🏻‍💻 Terminology

Deep Neural Network

multi-layer perceptron

sigmoid

MNIST

MSE error

logistic regression

Forward Propagation

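- Forward propagation is the sequential, layer-by-layer computation performed in a multi-layer perceptron.

If the input given to a neuron is written as a column vector and the neuron's weights as a row vector, the weighted sum can be expressed as a matrix inner product.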
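The weighted sum described above can be sketched in a few lines of plain Python. The function name `weighted_sum` and the example values are assumptions chosen only for illustration; they do not come from the original post.

```python
def weighted_sum(weights, inputs):
    """Inner product of a weight row vector and an input column vector."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    return sum(w * x for w, x in zip(weights, inputs))


# Hypothetical example values, chosen only for illustration.
w = [0.5, -1.0, 2.0]   # the neuron's weights (row vector)
x = [1.0, 2.0, 3.0]    # the neuron's input (column vector)
z = weighted_sum(w, x)  # 0.5*1.0 + (-1.0)*2.0 + 2.0*3.0
```

Here `z` is the single scalar that the neuron then passes to its activation function.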

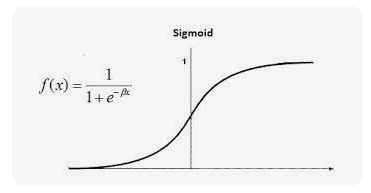

As shown above, this kind of activation function is used because training needs to be computationally easy.

The activation function above is called the sigmoid function, or the logistic function.

The linearly combined value passes through the activation function to produce the final output value.

Unlike the hard-threshold function, whose output is discrete, the sigmoid function outputs a real value between 0 and 1.
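The contrast between the two activation functions can be seen in a minimal sketch. The threshold at 0 in `hard_threshold` is an assumption (the original figure may define it differently), and the two-branch form of `sigmoid` is just one way to avoid overflow for large negative inputs.

```python
import math


def hard_threshold(z):
    """Discrete output: 1 if z >= 0, otherwise 0 (threshold at 0 is assumed)."""
    return 1 if z >= 0 else 0


def sigmoid(z):
    """Logistic function 1 / (1 + exp(-z)); output lies strictly between 0 and 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # equivalent form that avoids overflow when z is very negative
    return e / (1.0 + e)


# Compare both functions on a few hypothetical inputs.
for z in (-3.0, -0.1, 0.0, 0.1, 3.0):
    print(z, hard_threshold(z), sigmoid(z))
```

The hard threshold jumps from 0 to 1, while the sigmoid changes smoothly, which is what makes it usable with gradient-based training.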

The result is a column vector whose dimension equals the number of output nodes (output neurons).

Passing each element of this column vector through the sigmoid function gives the output values.

A linear layer can be formed as shown below.
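As a rough sketch of such a layer, each row of a weight matrix `W` holds the weights of one output neuron, so `W x` yields one weighted sum per output neuron. The bias vector `b`, the function names, and the example values are assumptions added for illustration and are not taken from the original post.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for very negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def linear_layer(W, x, b):
    """Compute W x + b: one weighted sum per output neuron (bias b is assumed)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


def forward(W, x, b):
    """Apply the sigmoid element-wise to the linear layer's output."""
    return [sigmoid(z) for z in linear_layer(W, x, b)]


# Hypothetical layer with 3 inputs and 2 output neurons.
W = [[0.5, -1.0, 2.0],
     [0.0, 0.0, 0.0]]
b = [0.0, 0.0]
x = [1.0, 2.0, 3.0]

z = linear_layer(W, x, b)  # vector with one entry per output neuron
a = forward(W, x, b)       # same length, each entry between 0 and 1
```

The output vector has as many entries as `W` has rows, matching the number of output neurons.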

- MNIST Dataset (Modified National Institute of Standards and Technology)

- MNIST Classification Model

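- A loss function can be built by comparing this output vector with the ground truth.

- The loss can be defined as the squared error between the actual prediction and the target.

- This loss is called the Mean Squared Error (MSE) loss.

- Because the difference between the predicted value and the true value for each class is small, the gradient used for training is not large, so training can be relatively slow.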
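A minimal sketch of the MSE loss for a single example follows. Averaging over the classes (rather than summing) is an assumption, as are the function names and the example values. The gradient helper shows why the update signal stays small: with outputs and targets in [0, 1], each difference `p - t` has magnitude at most 1.

```python
def mse_loss(prediction, target):
    """Mean of the squared differences between prediction and target."""
    if len(prediction) != len(target):
        raise ValueError("prediction and target must have the same length")
    n = len(prediction)
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / n


def mse_grad(prediction, target):
    """Gradient of mse_loss with respect to each prediction: 2 * (p - t) / n."""
    n = len(prediction)
    return [2.0 * (p - t) / n for p, t in zip(prediction, target)]


# Hypothetical 3-class example: the true class is index 1.
prediction = [0.1, 0.7, 0.2]
target = [0.0, 1.0, 0.0]

loss = mse_loss(prediction, target)
grad = mse_grad(prediction, target)
```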

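- For classification, the predicted output should be a vector of probabilities that sum to 1.

- The layer that produces this is called a softmax layer, or softmax classifier.

- To make the output neurons' values sum to 1, the following steps are applied.

- Each output neuron's value is passed through the exponential function.

- With all output values now positive, their relative proportions are computed.

- Each probability is obtained this way, so that the probabilities sum to 1.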
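The softmax steps above can be sketched as follows. Subtracting the maximum score before exponentiating is a common numerical-stability trick that is not mentioned in the original post; it does not change the resulting probabilities. The example scores are hypothetical.

```python
import math


def softmax(scores):
    """Exponentiate each score, then divide by the total so the results sum to 1."""
    m = max(scores)  # assumed stability trick: shifting by the max leaves ratios unchanged
    exps = [math.exp(s - m) for s in scores]  # every value is now positive
    total = sum(exps)
    return [e / total for e in exps]  # relative proportions


# Hypothetical raw values of three output neurons.
probs = softmax([1.0, 2.0, 3.0])
print(probs, sum(probs))
```

Larger raw values receive larger probabilities, and the ordering of the inputs is preserved.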

- Once the softmax layer's output vector is in a form suited to a multi-class classification task, a loss function is applied to it.

- The MSE loss was computed by comparing the outputs against targets of 0 and 1 and squaring the differences.

- For a softmax layer, the softmax loss (also called the cross-entropy loss) is used instead.

- As shown below, the output vector is given together with the ground-truth vector, which is a one-hot vector.
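A minimal sketch of the cross-entropy loss for one example with a one-hot target follows. The function name, the small constant `eps` used to avoid `log(0)`, and the example values are assumptions added for illustration.

```python
import math


def cross_entropy_loss(probs, one_hot_target, eps=1e-12):
    """Return -sum(t * log(p)); with a one-hot target this is -log(p of the true class)."""
    if len(probs) != len(one_hot_target):
        raise ValueError("probs and one_hot_target must have the same length")
    # eps is an assumed safeguard so that log(0) is never evaluated.
    return -sum(t * math.log(max(p, eps)) for p, t in zip(probs, one_hot_target))


# Hypothetical softmax output and one-hot ground truth (true class is index 1).
probs = [0.1, 0.7, 0.2]
target = [0.0, 1.0, 0.0]

loss = cross_entropy_loss(probs, target)
confident_loss = cross_entropy_loss([0.01, 0.98, 0.01], target)
```

Only the probability assigned to the true class contributes to the loss, and the loss shrinks toward 0 as that probability approaches 1.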

